add exp 4/5

This commit is contained in:

parent

74c2d8afa2

commit

3f7b3ad467

Binary file not shown.

|

After Width: | Height: | Size: 51 KiB |

Binary file not shown.

|

After Width: | Height: | Size: 275 KiB |

Binary file not shown.

|

After Width: | Height: | Size: 44 KiB |

Binary file not shown.

|

After Width: | Height: | Size: 30 KiB |

Binary file not shown.

|

After Width: | Height: | Size: 44 KiB |

Binary file not shown.

|

After Width: | Height: | Size: 128 KiB |

|

|

@ -54,6 +54,11 @@ allsides.com <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

huggingface.com <!-- .element: class="fragment" -->

|

huggingface.com <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

Note:

|

||||||

|

Let's get a handle on the shape of the data.

|

||||||

|

|

||||||

|

The sources, size, and features of the data.

|

||||||

|

|

||||||

===

|

===

|

||||||

|

|

||||||

<section data-background-iframe="https://www.memeorandum.com" data-background-interactive></section>

|

<section data-background-iframe="https://www.memeorandum.com" data-background-interactive></section>

|

||||||

|

|

@ -128,7 +133,7 @@ huggingface.com <!-- .element: class="fragment" -->

|

||||||

===

|

===

|

||||||

|

|

||||||

# Data Structures

|

# Data Structures

|

||||||

Stories

|

## Stories

|

||||||

|

|

||||||

- Top level stories. <!-- .element: class="fragment" -->

|

- Top level stories. <!-- .element: class="fragment" -->

|

||||||

- title.

|

- title.

|

||||||

|

|

@ -142,7 +147,7 @@ Stories

|

||||||

==

|

==

|

||||||

|

|

||||||

# Data Structures

|

# Data Structures

|

||||||

Bias

|

## Bias

|

||||||

|

|

||||||

- Per publisher. <!-- .element: class="fragment" -->

|

- Per publisher. <!-- .element: class="fragment" -->

|

||||||

- name.

|

- name.

|

||||||

|

|

@ -153,7 +158,7 @@ Bias

|

||||||

==

|

==

|

||||||

|

|

||||||

# Data Structures

|

# Data Structures

|

||||||

Embeddings

|

## Embeddings

|

||||||

|

|

||||||

- Per story title. <!-- .element: class="fragment" -->

|

- Per story title. <!-- .element: class="fragment" -->

|

||||||

- sentence embedding (n, 384).

|

- sentence embedding (n, 384).

|

||||||

|

|

@ -169,7 +174,7 @@ Embeddings

|

||||||

|

|

||||||

# Data Collection

|

# Data Collection

|

||||||

|

|

||||||

Story Scraper (simplified)

|

## Story Scraper (simplified)

|

||||||

|

|

||||||

```python

|

```python

|

||||||

day = timedelta(days=1)

|

day = timedelta(days=1)

|

||||||

|

|

@ -187,7 +192,8 @@ while cur <= end:

|

||||||

==

|

==

|

||||||

|

|

||||||

# Data Collection

|

# Data Collection

|

||||||

Bias Scraper (hard)

|

|

||||||

|

## Bias Scraper (hard)

|

||||||

|

|

||||||

```python

|

```python

|

||||||

...

|

...

|

||||||

|

|

@ -206,14 +212,16 @@ for row in rows:

|

||||||

==

|

==

|

||||||

|

|

||||||

# Data Collection

|

# Data Collection

|

||||||

Bias Scraper (easy)

|

|

||||||

|

## Bias Scraper (easy)

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

==

|

==

|

||||||

|

|

||||||

# Data Collection

|

# Data Collection

|

||||||

Embeddings (easy)

|

|

||||||

|

## Embeddings (easy)

|

||||||

|

|

||||||

```python

|

```python

|

||||||

# table = ...

|

# table = ...

|

||||||

|

|

@ -230,7 +238,8 @@ for chunk in table:

|

||||||

==

|

==

|

||||||

|

|

||||||

# Data Collection

|

# Data Collection

|

||||||

Classification Embeddings (medium)

|

|

||||||

|

## Classification Embeddings (medium)

|

||||||

|

|

||||||

```python

|

```python

|

||||||

...

|

...

|

||||||

|

|

@ -249,7 +258,8 @@ for i, class_id in enumerate(class_ids):

|

||||||

==

|

==

|

||||||

|

|

||||||

# Data Selection

|

# Data Selection

|

||||||

Stories

|

|

||||||

|

## Stories

|

||||||

|

|

||||||

- Clip the first and last full year of stories. <!-- .element: class="fragment" -->

|

- Clip the first and last full year of stories. <!-- .element: class="fragment" -->

|

||||||

- Remove duplicate stories (big stories span multiple days). <!-- .element: class="fragment" -->

|

- Remove duplicate stories (big stories span multiple days). <!-- .element: class="fragment" -->

|

||||||

|

|

@ -257,7 +267,7 @@ Stories

|

||||||

==

|

==

|

||||||

# Data Selection

|

# Data Selection

|

||||||

|

|

||||||

Publishers

|

## Publishers

|

||||||

|

|

||||||

- Combine subdomains of stories. <!-- .element: class="fragment" -->

|

- Combine subdomains of stories. <!-- .element: class="fragment" -->

|

||||||

- blog.washingtonpost.com and washingtonpost.com are considered the same publisher.

|

- blog.washingtonpost.com and washingtonpost.com are considered the same publisher.

|

||||||

|

|

@ -267,7 +277,7 @@ Publishers

|

||||||

|

|

||||||

# Data Selection

|

# Data Selection

|

||||||

|

|

||||||

Links

|

## Links

|

||||||

|

|

||||||

- Select only stories with publishers whose story had been a 'parent' ('original publishers'). <!-- .element: class="fragment" -->

|

- Select only stories with publishers whose story had been a 'parent' ('original publishers'). <!-- .element: class="fragment" -->

|

||||||

- Eliminates small blogs and non-original news.

|

- Eliminates small blogs and non-original news.

|

||||||

|

|

@ -279,7 +289,7 @@ Links

|

||||||

|

|

||||||

# Data Selection

|

# Data Selection

|

||||||

|

|

||||||

Bias

|

## Bias

|

||||||

|

|

||||||

- Keep all ratings, even ones with low agree/disagree ratio.

|

- Keep all ratings, even ones with low agree/disagree ratio.

|

||||||

- Join datasets on publisher name.

|

- Join datasets on publisher name.

|

||||||

|

|

@ -292,7 +302,7 @@ Bias

|

||||||

|

|

||||||

# Descriptive Stats

|

# Descriptive Stats

|

||||||

|

|

||||||

Raw

|

## Raw

|

||||||

|

|

||||||

| metric | value |

|

| metric | value |

|

||||||

|:------------------|--------:|

|

|:------------------|--------:|

|

||||||

|

|

@ -307,7 +317,7 @@ Raw

|

||||||

==

|

==

|

||||||

# Descriptive Stats

|

# Descriptive Stats

|

||||||

|

|

||||||

Stories Per Publisher

|

## Stories Per Publisher

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

@ -315,7 +325,7 @@ Stories Per Publisher

|

||||||

|

|

||||||

# Descriptive Stats

|

# Descriptive Stats

|

||||||

|

|

||||||

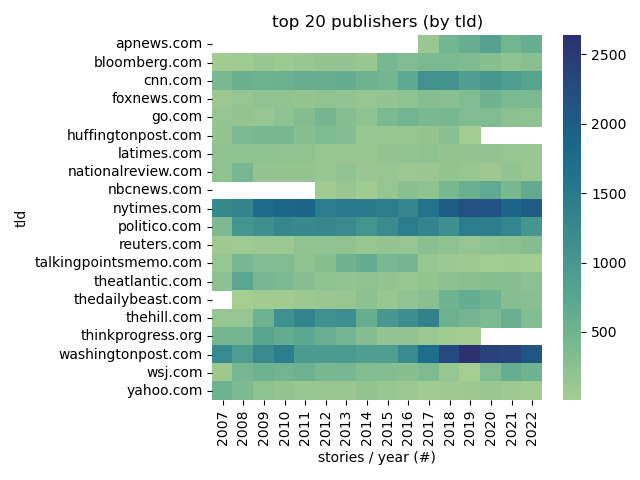

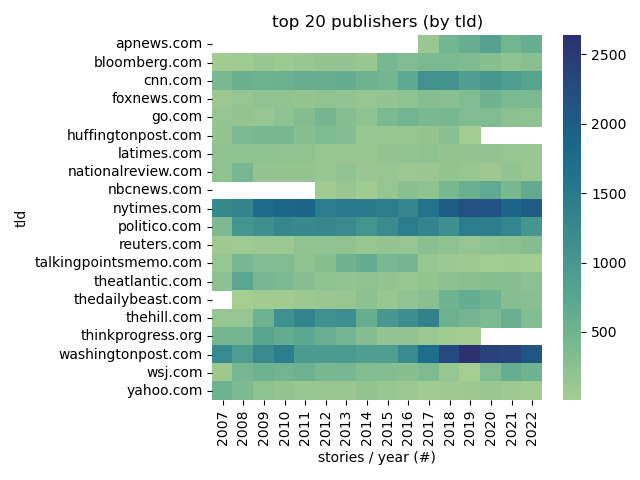

Top Publishers

|

## Top Publishers

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

@ -323,7 +333,7 @@ Top Publishers

|

||||||

|

|

||||||

# Descriptive Stats

|

# Descriptive Stats

|

||||||

|

|

||||||

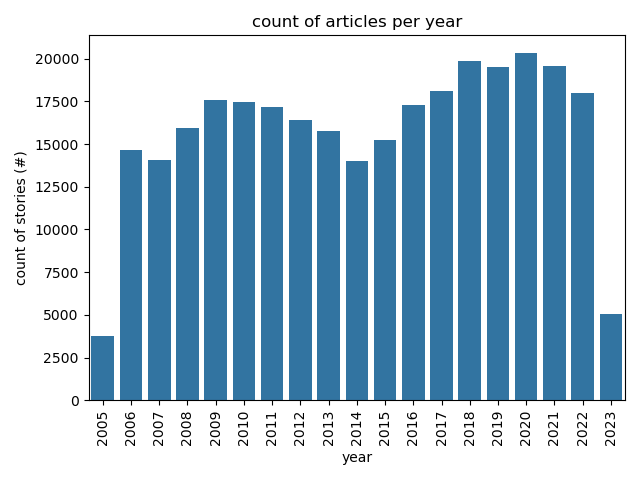

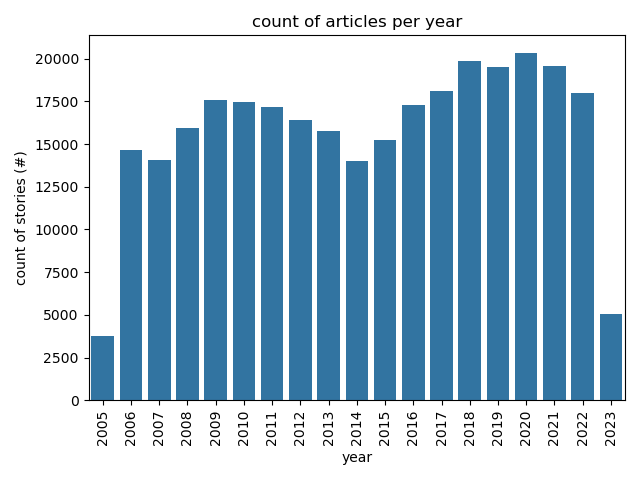

Articles Per Year

|

## Articles Per Year

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

@ -331,7 +341,7 @@ Articles Per Year

|

||||||

|

|

||||||

# Descriptive Stats

|

# Descriptive Stats

|

||||||

|

|

||||||

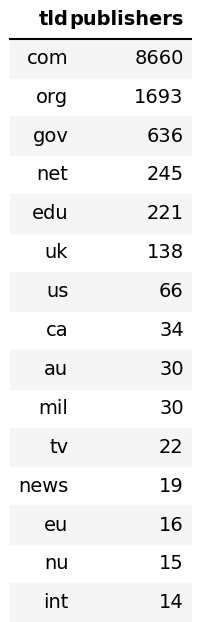

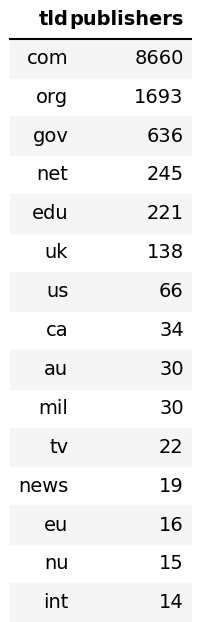

Common TLDs

|

## Common TLDs

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

@ -339,9 +349,9 @@ Common TLDs

|

||||||

|

|

||||||

# Descriptive Stats

|

# Descriptive Stats

|

||||||

|

|

||||||

Post Process

|

## Post Process

|

||||||

|

|

||||||

| key | value |

|

| metric | value |

|

||||||

|:------------------|--------:|

|

|:------------------|--------:|

|

||||||

| total stories | 251553 |

|

| total stories | 251553 |

|

||||||

| total related | 815183 |

|

| total related | 815183 |

|

||||||

|

|

@ -352,6 +362,7 @@ Post Process

|

||||||

| top level domains | 234 |

|

| top level domains | 234 |

|

||||||

|

|

||||||

===

|

===

|

||||||

|

|

||||||

# Experiments

|

# Experiments

|

||||||

|

|

||||||

1. **clustering** on link similarity. <!-- .element: class="fragment" -->

|

1. **clustering** on link similarity. <!-- .element: class="fragment" -->

|

||||||

|

|

@ -361,9 +372,16 @@ Post Process

|

||||||

5. **regression** on emotional classification over time and publication. <!-- .element: class="fragment" -->

|

5. **regression** on emotional classification over time and publication. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

===

|

===

|

||||||

|

|

||||||

# Experiment 1

|

# Experiment 1

|

||||||

|

|

||||||

Setup

|

**clustering** on link similarity.

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 1

|

||||||

|

|

||||||

|

## Setup

|

||||||

|

|

||||||

- Create one-hot encoding of links between publishers. <!-- .element: class="fragment" -->

|

- Create one-hot encoding of links between publishers. <!-- .element: class="fragment" -->

|

||||||

- Cluster the encoding. <!-- .element: class="fragment" -->

|

- Cluster the encoding. <!-- .element: class="fragment" -->

|

||||||

|

|

@ -379,7 +397,7 @@ Principle Component Analysis:

|

||||||

|

|

||||||

# Experiment 1

|

# Experiment 1

|

||||||

|

|

||||||

One Hot Encoding

|

## One Hot Encoding

|

||||||

|

|

||||||

| publisher | nytimes| wsj| newsweek| ...|

|

| publisher | nytimes| wsj| newsweek| ...|

|

||||||

|:----------|--------:|----:|--------:|----:|

|

|:----------|--------:|----:|--------:|----:|

|

||||||

|

|

@ -392,7 +410,7 @@ One Hot Encoding

|

||||||

|

|

||||||

# Experiment 1

|

# Experiment 1

|

||||||

|

|

||||||

n-Hot Encoding

|

## n-Hot Encoding

|

||||||

|

|

||||||

| publisher | nytimes| wsj| newsweek| ...|

|

| publisher | nytimes| wsj| newsweek| ...|

|

||||||

|:----------|--------:|----:|--------:|----:|

|

|:----------|--------:|----:|--------:|----:|

|

||||||

|

|

@ -405,7 +423,7 @@ n-Hot Encoding

|

||||||

|

|

||||||

# Experiment 1

|

# Experiment 1

|

||||||

|

|

||||||

Normalized n-Hot Encoding

|

## Normalized n-Hot Encoding

|

||||||

|

|

||||||

| publisher | nytimes| wsj| newsweek| ...|

|

| publisher | nytimes| wsj| newsweek| ...|

|

||||||

|:----------|--------:|----:|--------:|----:|

|

|:----------|--------:|----:|--------:|----:|

|

||||||

|

|

@ -418,7 +436,7 @@ Normalized n-Hot Encoding

|

||||||

|

|

||||||

# Experiment 1

|

# Experiment 1

|

||||||

|

|

||||||

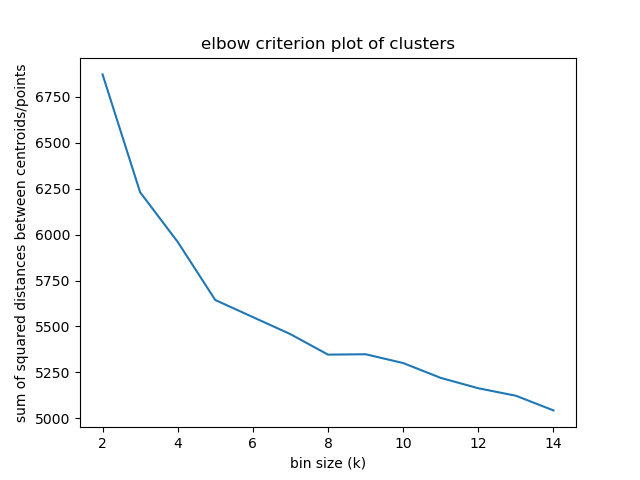

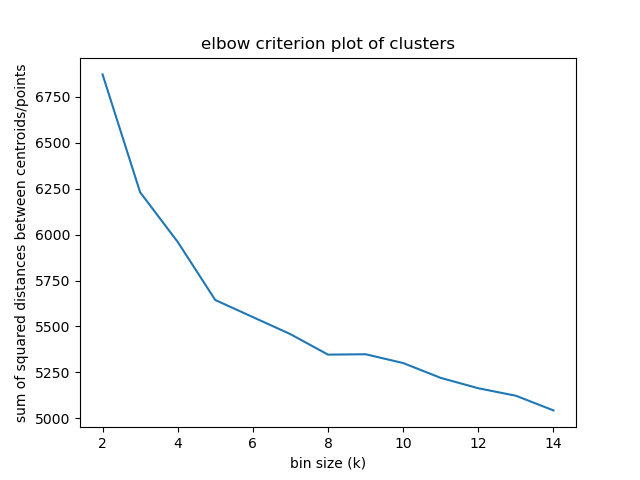

Elbow criterion

|

## Elbow criterion

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

@ -434,7 +452,7 @@ Percentage of variance explained is the ratio of the between-group variance to t

|

||||||

|

|

||||||

# Experiment 1

|

# Experiment 1

|

||||||

|

|

||||||

Link Magnitude

|

## Link Magnitude

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

@ -442,7 +460,7 @@ Link Magnitude

|

||||||

|

|

||||||

# Experiment 1

|

# Experiment 1

|

||||||

|

|

||||||

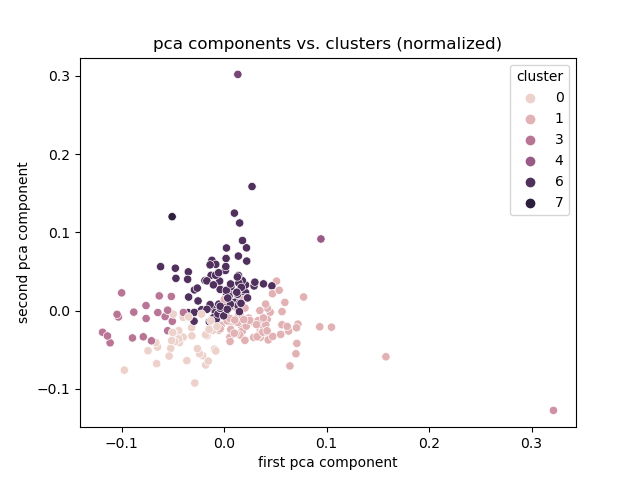

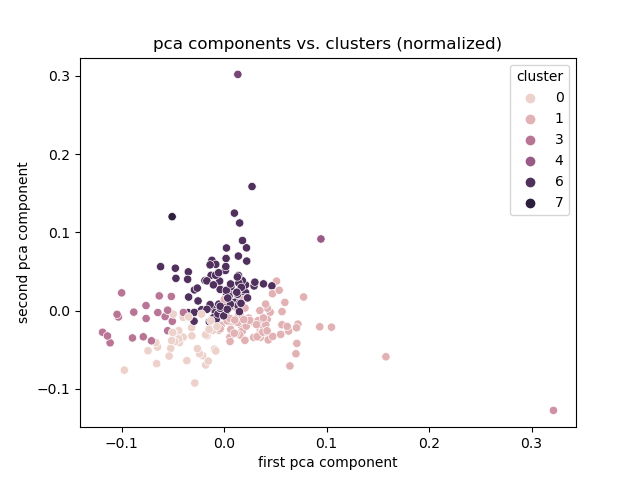

Normalized

|

## Normalized

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

@ -450,7 +468,7 @@ Normalized

|

||||||

|

|

||||||

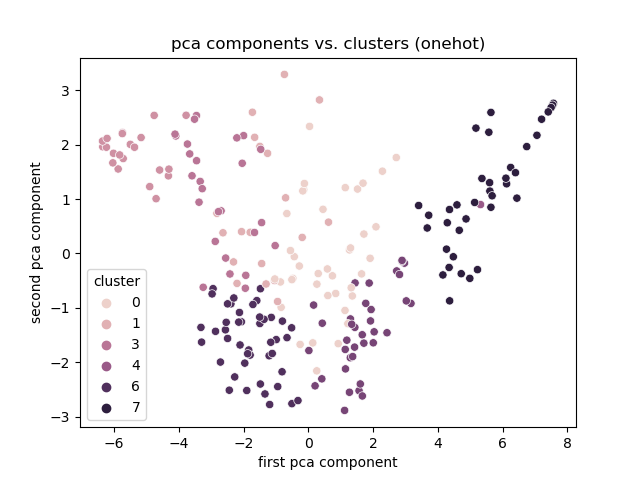

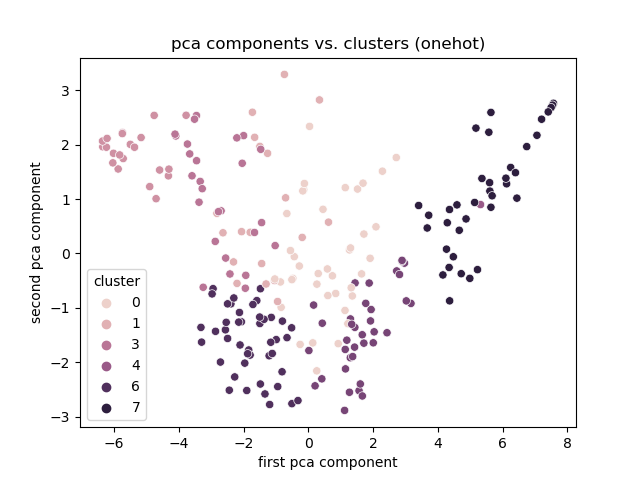

# Experiment 1

|

# Experiment 1

|

||||||

|

|

||||||

Onehot

|

## One Hot

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

@ -458,20 +476,27 @@ Onehot

|

||||||

|

|

||||||

# Experiment 1

|

# Experiment 1

|

||||||

|

|

||||||

Discussion

|

## Discussion

|

||||||

|

|

||||||

- Best encoding: One hot. <!-- .element: class="fragment" -->

|

- Best encoding: One hot. <!-- .element: class="fragment" -->

|

||||||

- Clusters based on total links otherwise.

|

- Clusters, but no explanation. <!-- .element: class="fragment" -->

|

||||||

- Clusters, but no explanation

|

- Limitation: need the link encoding to cluster. <!-- .element: class="fragment" -->

|

||||||

- Limitation: need the link encoding to cluster.

|

|

||||||

- Smaller publishers might not link very much.

|

- Smaller publishers might not link very much.

|

||||||

|

- TODO: Association Rule Mining. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

===

|

===

|

||||||

|

|

||||||

# Experiment 2

|

# Experiment 2

|

||||||

|

|

||||||

Setup

|

**classification** on link similarity.

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 2

|

||||||

|

|

||||||

|

## Setup

|

||||||

|

|

||||||

|

- **clustering**. <!-- .element: class="fragment" -->

|

||||||

- Create features. <!-- .element: class="fragment" -->:

|

- Create features. <!-- .element: class="fragment" -->:

|

||||||

- Publisher frequency.

|

- Publisher frequency.

|

||||||

- Reuse link encodings.

|

- Reuse link encodings.

|

||||||

|

|

@ -483,7 +508,8 @@ Note:

|

||||||

|

|

||||||

==

|

==

|

||||||

# Experiment 2

|

# Experiment 2

|

||||||

Descriptive stats

|

|

||||||

|

## Descriptive stats

|

||||||

|

|

||||||

| metric | value |

|

| metric | value |

|

||||||

|:------------|:----------|

|

|:------------|:----------|

|

||||||

|

|

@ -498,7 +524,7 @@ Descriptive stats

|

||||||

|

|

||||||

# Experiment 2

|

# Experiment 2

|

||||||

|

|

||||||

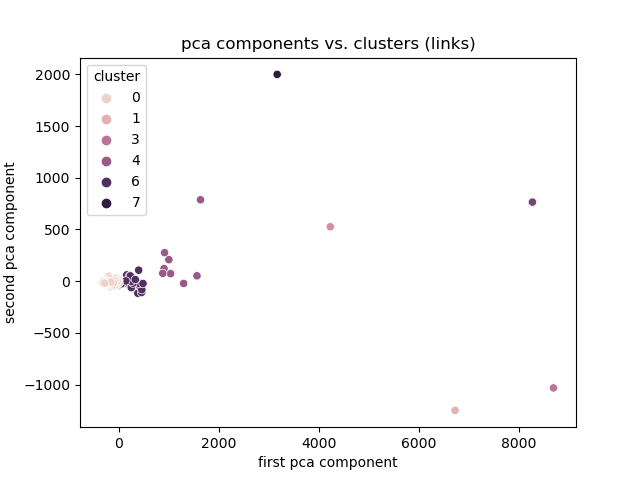

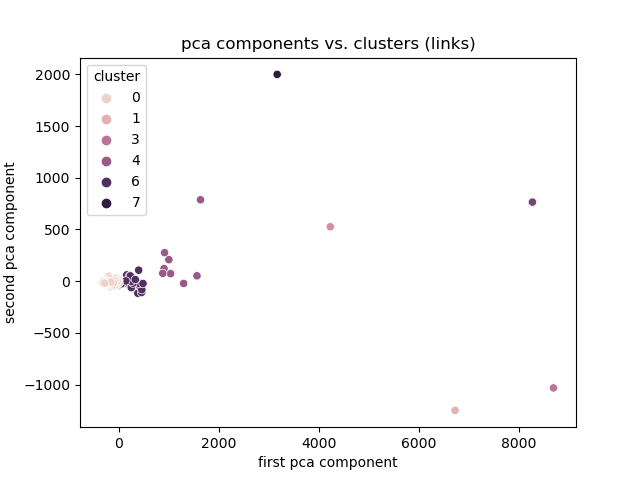

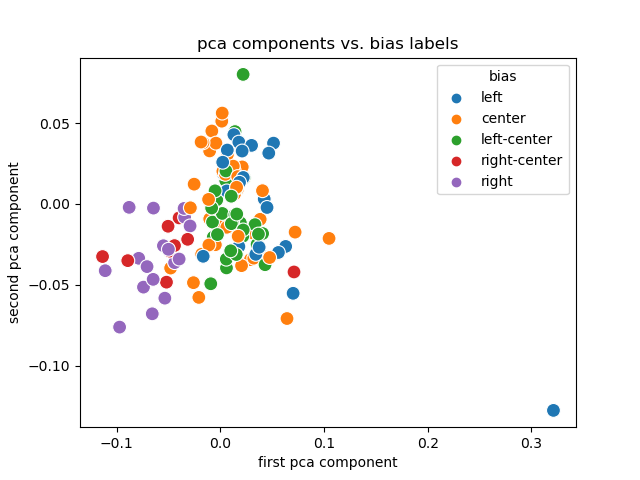

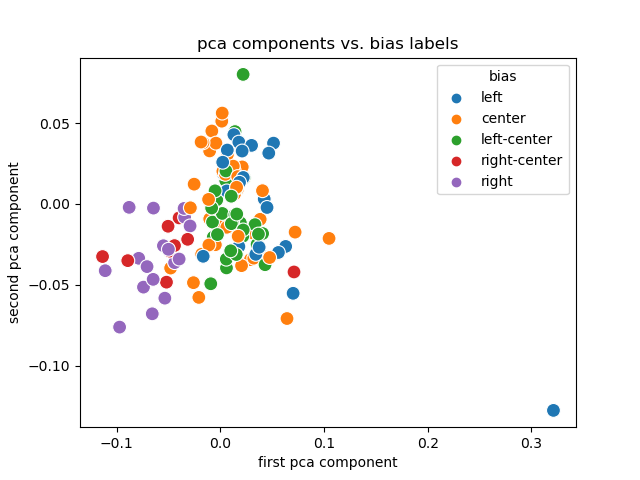

PCA + Labels

|

## PCA + Labels

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

@ -506,7 +532,7 @@ PCA + Labels

|

||||||

|

|

||||||

# Experiment 2

|

# Experiment 2

|

||||||

|

|

||||||

Discussion

|

## Discussion

|

||||||

|

|

||||||

- Link encodings (and their PCA) are useful. <!-- .element: class="fragment" -->

|

- Link encodings (and their PCA) are useful. <!-- .element: class="fragment" -->

|

||||||

- Labels are (sort of) separated and clustered.

|

- Labels are (sort of) separated and clustered.

|

||||||

|

|

@ -515,7 +541,7 @@ Discussion

|

||||||

|

|

||||||

# Experiment 2

|

# Experiment 2

|

||||||

|

|

||||||

Limitations

|

## Limitations

|

||||||

|

|

||||||

- Dependent on accurate rating. <!-- .element: class="fragment" -->

|

- Dependent on accurate rating. <!-- .element: class="fragment" -->

|

||||||

- Ordinal ratings not available. <!-- .element: class="fragment" -->

|

- Ordinal ratings not available. <!-- .element: class="fragment" -->

|

||||||

|

|

@ -525,13 +551,260 @@ Limitations

|

||||||

|

|

||||||

===

|

===

|

||||||

|

|

||||||

# Experiment 3

|

# Experiment 3

|

||||||

|

|

||||||

Setup

|

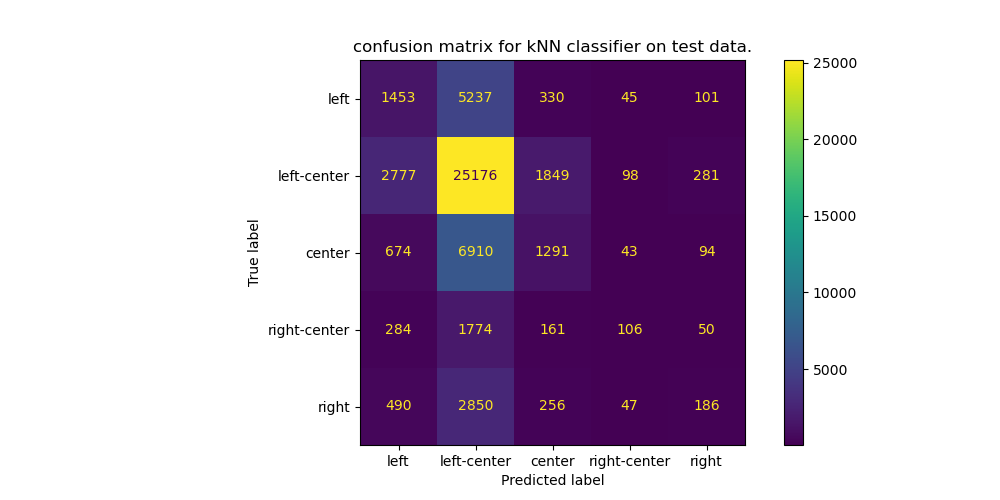

**classification** on sentence embedding.

|

||||||

|

|

||||||

==

|

==

|

||||||

|

|

||||||

# Limitations

|

# Experiment 3

|

||||||

|

|

||||||

|

## Setup

|

||||||

|

|

||||||

|

|

||||||

|

- **classification**. <!-- .element: class="fragment" -->

|

||||||

|

- Generate sentence embedding for each title. <!-- .element: class="fragment" -->

|

||||||

|

- Rerun PCA analysis on title embeddings. <!-- .element: class="fragment" -->

|

||||||

|

- Use kNN classifier to map embedding features to bias rating. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 3

|

||||||

|

|

||||||

|

## Sentence Embeddings

|

||||||

|

|

||||||

|

1. Extract titles.

|

||||||

|

2. Tokenize titles.

|

||||||

|

3. Pick pretrained Language Model.

|

||||||

|

4. Generate embeddings from tokens.

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 3

|

||||||

|

|

||||||

|

## Tokens

|

||||||

|

|

||||||

|

**The sentence:**

|

||||||

|

|

||||||

|

"Spain, Land of 10 P.M. Dinners, Asks if It's Time to Reset Clock"

|

||||||

|

|

||||||

|

**Tokenizes to:**

|

||||||

|

|

||||||

|

```

|

||||||

|

['[CLS]', 'spain', ',', 'land', 'of', '10', 'p', '.', 'm', '.',

|

||||||

|

'dinners', ',', 'asks', 'if', 'it', "'", 's', 'time', 'to',

|

||||||

|

'reset', 'clock', '[SEP]']

|

||||||

|

```

|

||||||

|

|

||||||

|

Note:

|

||||||

|

[CLS] is unique to BERT models and stands for classification.

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 3

|

||||||

|

|

||||||

|

## Tokens

|

||||||

|

|

||||||

|

**The sentence:**

|

||||||

|

|

||||||

|

"NPR/PBS NewsHour/Marist Poll Results and Analysis"

|

||||||

|

|

||||||

|

**Tokenizes to:**

|

||||||

|

|

||||||

|

```

|

||||||

|

['[CLS]', 'npr', '/', 'pbs', 'news', '##ho', '##ur', '/', 'maris',

|

||||||

|

'##t', 'poll', 'results', 'and', 'analysis', '[SEP]', '[PAD]',

|

||||||

|

'[PAD]', '[PAD]', '[PAD]', '[PAD]', '[PAD]', '[PAD]']

|

||||||

|

```

|

||||||

|

|

||||||

|

Note:

|

||||||

|

The padding is there to make all tokenized vectors equal length.

|

||||||

|

|

||||||

|

The tokenizer also outputs a mask vector that the language model uses to ignore the padding.

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 3

|

||||||

|

|

||||||

|

## Embeddings

|

||||||

|

|

||||||

|

- Using a BERT (Bidirectional Encoder Representations from Transformers) based model.

|

||||||

|

- Input: tokens.

|

||||||

|

- Output: dense vectors representing 'semantic meaning' of tokens.

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 3

|

||||||

|

|

||||||

|

## Embeddings

|

||||||

|

|

||||||

|

**The tokens:**

|

||||||

|

|

||||||

|

```

|

||||||

|

['[CLS]', 'npr', '/', 'pbs', 'news', '##ho', '##ur', '/', 'maris',

|

||||||

|

'##t', 'poll', 'results', 'and', 'analysis', '[SEP]', '[PAD]',

|

||||||

|

'[PAD]', '[PAD]', '[PAD]', '[PAD]', '[PAD]', '[PAD]']

|

||||||

|

```

|

||||||

|

|

||||||

|

**Embeds to a vector (1, 384):**

|

||||||

|

|

||||||

|

```

|

||||||

|

array([[ 0.12444635, -0.05962477, -0.00127911, ..., 0.13943022,

|

||||||

|

-0.2552534 , -0.00238779],

|

||||||

|

[ 0.01535596, -0.05933844, -0.0099495 , ..., 0.48110735,

|

||||||

|

0.1370568 , 0.3285091 ],

|

||||||

|

[ 0.2831368 , -0.4200529 , 0.10879617, ..., 0.15663117,

|

||||||

|

-0.29782432, 0.4289513 ],

|

||||||

|

...,

|

||||||

|

```

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 3

|

||||||

|

|

||||||

|

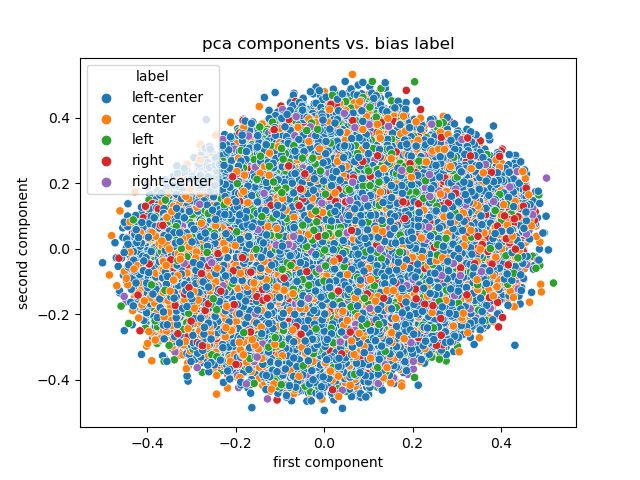

## Results

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Note:

|

||||||

|

Not a lot of information in PCA this time.

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 3

|

||||||

|

|

||||||

|

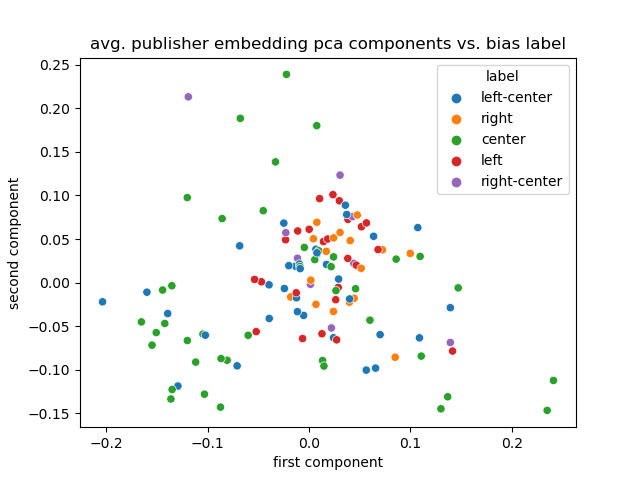

## Results

|

||||||

|

|

||||||

|

<!-- .element: class="r-stretch" -->

|

||||||

|

|

||||||

|

|

||||||

|

Note:

|

||||||

|

What about average publisher embedding?

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 3

|

||||||

|

|

||||||

|

## Results

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Note:

|

||||||

|

Trained a kNN from sklearn.

|

||||||

|

|

||||||

|

Set aside 20% of the data as a test set.

|

||||||

|

|

||||||

|

Once trained, compared the predictions with the true on the test set.

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 3

|

||||||

|

|

||||||

|

## Discussion

|

||||||

|

|

||||||

|

- Embedding space is hard to condense with PCA. <!-- .element: class="fragment" -->

|

||||||

|

- Maybe the classifier is learning to guess 'left-ish'? <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# Experiment 4

|

||||||

|

|

||||||

|

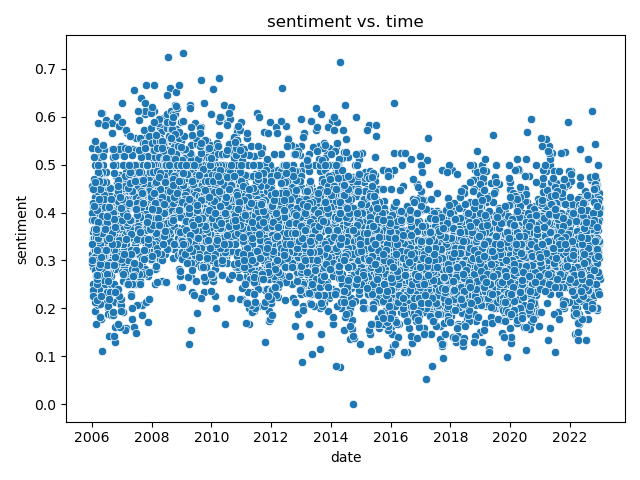

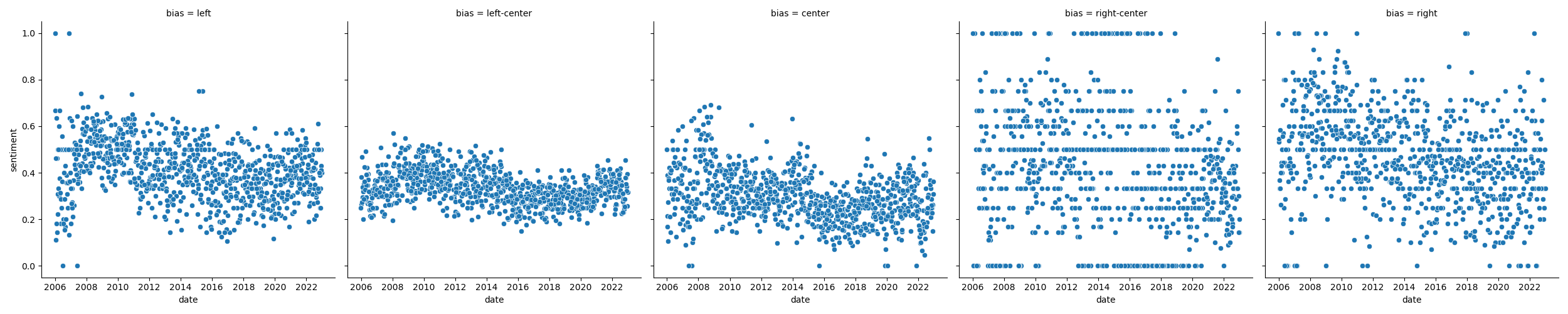

**classification** on sentiment analysis.

|

||||||

|

|

||||||

|

==

|

||||||

|

# Experiment 4

|

||||||

|

|

||||||

|

## Setup

|

||||||

|

|

||||||

|

- Use pretrained Language Classifier. <!-- .element: class="fragment" -->

|

||||||

|

- Previously: Mapped twitter posts to tokens, to embedding, to ['positive', 'negative'] labels. <!-- .element: class="fragment" -->

|

||||||

|

- Predict: rate of neutral titles decreasing over time.

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 4

|

||||||

|

|

||||||

|

## Results

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

# Experiment 4

|

||||||

|

|

||||||

|

## Results

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 4

|

||||||

|

|

||||||

|

## Discussion

|

||||||

|

|

||||||

|

-

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# Experiment 5

|

||||||

|

|

||||||

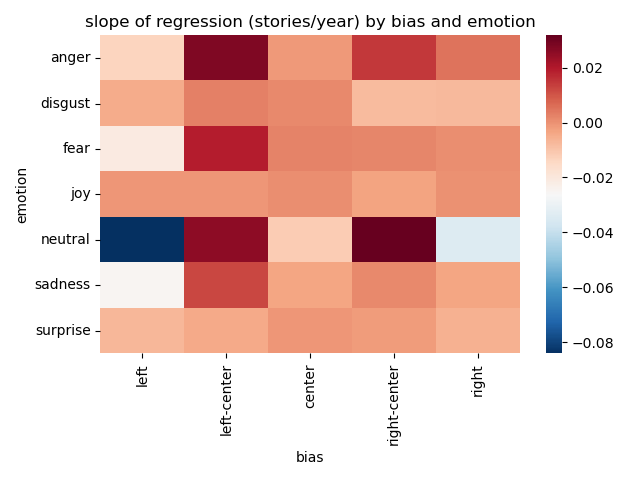

|

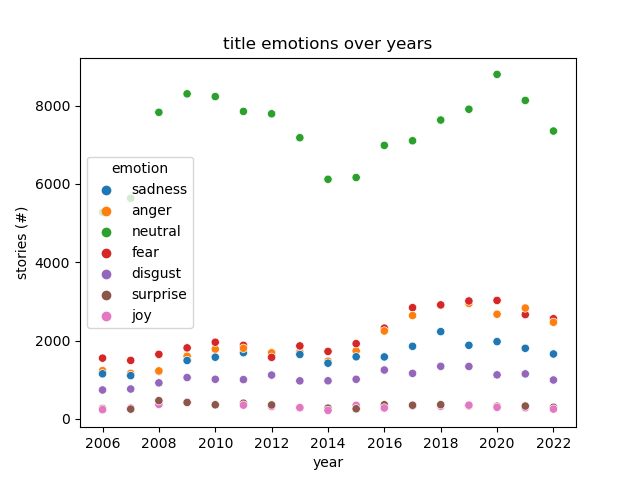

**regression** on emotional classification over time and publication.

|

||||||

|

|

||||||

|

==

|

||||||

|

# Experiment 5

|

||||||

|

|

||||||

|

## Setup

|

||||||

|

|

||||||

|

- Use pretrained language classifier. <!-- .element: class="fragment" -->

|

||||||

|

- Previously: Mapped reddit posts to tokens, to embedding, to emotion labels. <!-- .element: class="fragment" -->

|

||||||

|

- Predict: rate of neutral titles decreasing over time.

|

||||||

|

- Classify:

|

||||||

|

- features: emotional labels

|

||||||

|

- labels: bias

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 5

|

||||||

|

|

||||||

|

## Results

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 5

|

||||||

|

|

||||||

|

## Results

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 5

|

||||||

|

|

||||||

|

## Discussion

|

||||||

|

|

||||||

|

- Neutral story titles dominate the dataset. <!-- .element: class="fragment" -->

|

||||||

|

- Increase in stories published might explain most of the trend. <!-- .element: class="fragment" -->

|

||||||

|

- Far-right and far-left both became less neutral. <!-- .element: class="fragment" -->

|

||||||

|

- Left-Center and right-center became more emotional, but also neutral. <!-- .element: class="fragment" -->

|

||||||

|

- Not a lot of movement overall. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# Experiment 6 (**TODO**)

|

||||||

|

|

||||||

|

## Setup

|

||||||

|

|

||||||

|

- Have a lot of features now. <!-- .element: class="fragment" -->

|

||||||

|

- Link PCA components.

|

||||||

|

- Embedding PCA components.

|

||||||

|

- Sentiment.

|

||||||

|

- Emotion.

|

||||||

|

- Can we predict with all of them: Bias. <!-- .element: class="fragment" -->

|

||||||

|

- End user: Is that useful? Where will I get all that at inference time? <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# Overall Limitations

|

||||||

|

|

||||||

- Many different authors under the same publisher. <!-- .element: class="fragment" -->

|

- Many different authors under the same publisher. <!-- .element: class="fragment" -->

|

||||||

- Publishers use syndication. <!-- .element: class="fragment" -->

|

- Publishers use syndication. <!-- .element: class="fragment" -->

|

||||||

|

|

|

||||||

15

src/cli.py

15

src/cli.py

|

|

@ -12,6 +12,8 @@ if __name__ == "__main__":

|

||||||

cli.add_command(scrape.parse)

|

cli.add_command(scrape.parse)

|

||||||

cli.add_command(scrape.load)

|

cli.add_command(scrape.load)

|

||||||

cli.add_command(scrape.normalize)

|

cli.add_command(scrape.normalize)

|

||||||

|

cli.add_command(scrape.create_elections_table)

|

||||||

|

|

||||||

import word

|

import word

|

||||||

# cli.add_command(word.distance)

|

# cli.add_command(word.distance)

|

||||||

# cli.add_command(word.train)

|

# cli.add_command(word.train)

|

||||||

|

|

@ -30,8 +32,11 @@ if __name__ == "__main__":

|

||||||

cli.add_command(emotion.normalize)

|

cli.add_command(emotion.normalize)

|

||||||

cli.add_command(emotion.analyze)

|

cli.add_command(emotion.analyze)

|

||||||

cli.add_command(emotion.create_table)

|

cli.add_command(emotion.create_table)

|

||||||

|

|

||||||

import sentence

|

import sentence

|

||||||

cli.add_command(sentence.embed)

|

cli.add_command(sentence.embed)

|

||||||

|

cli.add_command(sentence.create_avg_pca_table)

|

||||||

|

|

||||||

from train import main as train_main

|

from train import main as train_main

|

||||||

cli.add_command(train_main.main)

|

cli.add_command(train_main.main)

|

||||||

|

|

||||||

|

|

@ -54,4 +59,14 @@ if __name__ == "__main__":

|

||||||

import plots.classifier as plotc

|

import plots.classifier as plotc

|

||||||

cli.add_command(plotc.pca_with_classes)

|

cli.add_command(plotc.pca_with_classes)

|

||||||

|

|

||||||

|

import plots

|

||||||

|

cli.add_command(plots.sentence.sentence_pca)

|

||||||

|

cli.add_command(plots.sentence.avg_sentence_pca)

|

||||||

|

cli.add_command(plots.emotion.emotion_over_time)

|

||||||

|

cli.add_command(plots.emotion.emotion_regression)

|

||||||

|

|

||||||

|

cli.add_command(plots.sentiment.over_time)

|

||||||

|

cli.add_command(plots.sentiment.bias_over_time)

|

||||||

|

|

||||||

|

|

||||||

cli()

|

cli()

|

||||||

|

|

|

||||||

|

|

@ -335,3 +335,92 @@ def another_norm():

|

||||||

on sv2.id = s.id

|

on sv2.id = s.id

|

||||||

limit 5

|

limit 5

|

||||||

""")

|

""")

|

||||||

|

|

||||||

|

@click.command('data:create-election-table')

|

||||||

|

def create_elections_table():

|

||||||

|

df = pd.read_csv(data_dir() / 'election_dates.csv', sep="|")

|

||||||

|

df['date'] = pd.to_datetime(df.date)

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

|

DB.query("""

|

||||||

|

CREATE OR REPLACE TABLE election_dates AS

|

||||||

|

SELECT

|

||||||

|

row_number() over() as id

|

||||||

|

,type

|

||||||

|

,date

|

||||||

|

FROM df

|

||||||

|

""")

|

||||||

|

|

||||||

|

DB.query("""

|

||||||

|

CREATE OR REPLACE TABLE election_distance AS

|

||||||

|

WITH cte as (

|

||||||

|

SELECT

|

||||||

|

day(e.date - s.published_at) as days_away

|

||||||

|

,e.id as election_id

|

||||||

|

,e.date as election_date

|

||||||

|

,s.published_at as publish_date

|

||||||

|

FROM (

|

||||||

|

SELECT

|

||||||

|

DISTINCT

|

||||||

|

published_at

|

||||||

|

FROM top.stories

|

||||||

|

) s

|

||||||

|

CROSS JOIN election_dates e

|

||||||

|

) , windowed as (

|

||||||

|

SELECT

|

||||||

|

row_number() over(partition by publish_date order by abs(days_away) asc) as rn

|

||||||

|

,days_away

|

||||||

|

,publish_date

|

||||||

|

,election_date

|

||||||

|

,election_id

|

||||||

|

FROM cte

|

||||||

|

)

|

||||||

|

SELECT

|

||||||

|

days_away

|

||||||

|

,publish_date

|

||||||

|

,election_date

|

||||||

|

,election_id

|

||||||

|

FROM windowed

|

||||||

|

WHERE rn = 1

|

||||||

|

""")

|

||||||

|

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

@click.command('scrape:create-denorm')

|

||||||

|

def create_denorm():

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

|

DB.sql("create schema denorm")

|

||||||

|

DB.sql("""

|

||||||

|

CREATE OR REPLACE TABLE denorm.stories AS

|

||||||

|

SELECT

|

||||||

|

s.id as story_id

|

||||||

|

,s.title

|

||||||

|

,s.url

|

||||||

|

,s.published_at

|

||||||

|

,s.author

|

||||||

|

,p.name as publisher

|

||||||

|

,p.tld as tld

|

||||||

|

,sent.class_id as sentiment

|

||||||

|

,d.days_away as election_distance

|

||||||

|

,b.ordinal as bias

|

||||||

|

,pca.first as link_1

|

||||||

|

,pca.second as link_2

|

||||||

|

,e.emotion_id as emotion

|

||||||

|

FROM top.stories s

|

||||||

|

JOIN top.publishers p

|

||||||

|

ON p.id = s.publisher_id

|

||||||

|

JOIN top.story_sentiments sent

|

||||||

|

ON s.id = sent.story_id

|

||||||

|

JOIN election_distance d

|

||||||

|

ON d.election_date = s.published_at

|

||||||

|

JOIN publisher_bias pb

|

||||||

|

ON pb.publisher_id = p.id

|

||||||

|

JOIN bias_ratings b

|

||||||

|

ON b.id = pb.bias_id

|

||||||

|

JOIN top.publisher_pca_onehot pca

|

||||||

|

ON pca.publisher_id = p.id

|

||||||

|

JOIN story_emotions e

|

||||||

|

ON e.story_id = s.id

|

||||||

|

""")

|

||||||

|

DB.close()

|

||||||

|

|

|

||||||

|

|

@ -379,24 +379,34 @@ def debug():

|

||||||

|

|

||||||

def another():

|

def another():

|

||||||

DB = connect()

|

DB = connect()

|

||||||

|

|

||||||

DB.sql("""

|

DB.sql("""

|

||||||

select

|

select

|

||||||

*

|

*

|

||||||

from emotions

|

from emotions

|

||||||

""")

|

""")

|

||||||

|

|

||||||

emotions = DB.sql("""

|

DB.sql("""

|

||||||

select

|

select

|

||||||

year(s.published_at) as year

|

*

|

||||||

,se.label as emotion

|

from story_emotions

|

||||||

,count(1) as stories

|

""")

|

||||||

from stories s

|

|

||||||

join story_emotions se

|

emotions = DB.sql("""

|

||||||

on s.id = se.story_id

|

SELECT

|

||||||

group by

|

YEAR(s.published_at) AS year

|

||||||

year(s.published_at)

|

,e.label AS emotion

|

||||||

,se.label

|

,count(1) AS stories

|

||||||

|

FROM stories s

|

||||||

|

JOIN story_emotions se

|

||||||

|

ON s.id = se.story_id

|

||||||

|

JOIN emotions e

|

||||||

|

ON e.id = se.emotion_id

|

||||||

|

GROUP by

|

||||||

|

YEAR(s.published_at)

|

||||||

|

,e.label

|

||||||

""").df()

|

""").df()

|

||||||

|

emotions

|

||||||

|

|

||||||

sns.scatterplot(x=emotions['year'], y=emotions['stories'], hue=emotions['emotion'])

|

sns.scatterplot(x=emotions['year'], y=emotions['stories'], hue=emotions['emotion'])

|

||||||

plt.show()

|

plt.show()

|

||||||

|

|

|

||||||

|

|

@ -0,0 +1,9 @@

|

||||||

|

import plots.sentence

|

||||||

|

import plots.emotion

|

||||||

|

import plots.sentiment

|

||||||

|

|

||||||

|

__all__ = [

|

||||||

|

'sentence'

|

||||||

|

'emotion',

|

||||||

|

'sentiment',

|

||||||

|

]

|

||||||

|

|

@ -0,0 +1,117 @@

|

||||||

|

import click

|

||||||

|

from data.main import connect

|

||||||

|

import os

|

||||||

|

from pathlib import Path

|

||||||

|

import seaborn as sns

|

||||||

|

import matplotlib.pyplot as plt

|

||||||

|

import numpy as np

|

||||||

|

import pandas as pd

|

||||||

|

|

||||||

|

out_path = Path(os.getenv('DATA_MINING_DOC_DIR')) / 'figures'

|

||||||

|

|

||||||

|

@click.command('plot:emotion-over-time')

|

||||||

|

def emotion_over_time():

|

||||||

|

filename = "emotion_over_time.png"

|

||||||

|

DB = connect()

|

||||||

|

|

||||||

|

emotions = DB.sql("""

|

||||||

|

SELECT

|

||||||

|

date_trunc('year', s.published_at) AS year

|

||||||

|

,e.label AS emotion

|

||||||

|

,count(1) AS stories

|

||||||

|

FROM top.stories s

|

||||||

|

JOIN story_emotions se

|

||||||

|

ON s.id = se.story_id

|

||||||

|

JOIN emotions e

|

||||||

|

ON e.id = se.emotion_id

|

||||||

|

GROUP by

|

||||||

|

date_trunc('year', s.published_at)

|

||||||

|

,e.label

|

||||||

|

""").df()

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

ax = sns.scatterplot(x=emotions['year'], y=emotions['stories'], hue=emotions['emotion'])

|

||||||

|

ax.set(title="title emotions over years", xlabel="year", ylabel="stories (#)")

|

||||||

|

plt.savefig(out_path / filename)

|

||||||

|

print(f"saved: {filename}")

|

||||||

|

|

||||||

|

@click.command('plot:emotion-regression')

|

||||||

|

def emotion_regression():

|

||||||

|

from sklearn import linear_model

|

||||||

|

from sklearn.model_selection import train_test_split

|

||||||

|

from sklearn.metrics import ConfusionMatrixDisplay

|

||||||

|

|

||||||

|

filename = "emotion_regression.png"

|

||||||

|

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

|

emotions = DB.query("""

|

||||||

|

SELECT

|

||||||

|

label

|

||||||

|

FROM emotions e

|

||||||

|

""").df()['label'].to_list()

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

|

df = DB.sql(f"""

|

||||||

|

SELECT

|

||||||

|

epoch(date_trunc('yearweek', s.published_at)) AS date

|

||||||

|

,e.id AS emotion_id

|

||||||

|

,p.id as publisher_id

|

||||||

|

,count(1) AS stories

|

||||||

|

FROM top.stories s

|

||||||

|

JOIN top.publishers p

|

||||||

|

ON p.id = s.publisher_id

|

||||||

|

JOIN story_emotions se

|

||||||

|

ON s.id = se.story_id

|

||||||

|

JOIN emotions e

|

||||||

|

ON e.id = se.emotion_id

|

||||||

|

GROUP by

|

||||||

|

epoch(date_trunc('yearweek', s.published_at))

|

||||||

|

,p.id

|

||||||

|

,e.id

|

||||||

|

""").df()

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

results = []

|

||||||

|

for (emotion_id, publisher_id), group in df.groupby(['emotion_id', 'publisher_id']):

|

||||||

|

model = linear_model.LinearRegression()

|

||||||

|

x = group['date'].to_numpy().reshape(-1, 1)

|

||||||

|

y = group['stories'].to_numpy()

|

||||||

|

model.fit(x, y)

|

||||||

|

per_year = model.coef_.item() * 60 * 60 * 24 * 365

|

||||||

|

results.append({'emotion_id' : emotion_id, 'publisher_id':publisher_id, 'per_year' : per_year})

|

||||||

|

results = pd.DataFrame(results)

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

|

out = DB.query("""

|

||||||

|

SELECT

|

||||||

|

e.label as emotion

|

||||||

|

--,p.tld

|

||||||

|

,avg(results.per_year) as avg_reg_coef

|

||||||

|

,b.ordinal

|

||||||

|

FROM results

|

||||||

|

JOIN emotions e

|

||||||

|

ON e.id = results.emotion_id

|

||||||

|

JOIN top.publishers p

|

||||||

|

ON p.id = results.publisher_id

|

||||||

|

JOIN publisher_bias pb

|

||||||

|

ON pb.publisher_id = results.publisher_id

|

||||||

|

JOIN bias_ratings b

|

||||||

|

ON b.id = pb.bias_id

|

||||||

|

GROUP BY

|

||||||

|

e.label

|

||||||

|

,b.ordinal

|

||||||

|

""").df()

|

||||||

|

DB.close()

|

||||||

|

pivot = out.pivot(index=['emotion'], columns=['ordinal'], values=['avg_reg_coef'])

|

||||||

|

|

||||||

|

ax = sns.heatmap(pivot, cmap='RdBu_r')

|

||||||

|

ticklabels = ['left', 'left-center', 'center', 'right-center', 'right']

|

||||||

|

ax.set(title="slope of regression (stories/year) by bias and emotion"

|

||||||

|

,xticklabels=ticklabels

|

||||||

|

,xlabel="bias"

|

||||||

|

,ylabel="emotion")

|

||||||

|

plt.tight_layout()

|

||||||

|

plt.savefig(out_path / filename)

|

||||||

|

print(f"saved: {filename}")

|

||||||

|

|

@ -0,0 +1,111 @@

|

||||||

|

import click

|

||||||

|

from data.main import connect

|

||||||

|

import os

|

||||||

|

from pathlib import Path

|

||||||

|

import seaborn as sns

|

||||||

|

import matplotlib.pyplot as plt

|

||||||

|

import numpy as np

|

||||||

|

import pandas as pd

|

||||||

|

|

||||||

|

out_path = Path(os.getenv('DATA_MINING_DOC_DIR')) / 'figures'

|

||||||

|

data_path = Path(os.getenv('DATA_MINING_DATA_DIR'))

|

||||||

|

|

||||||

|

@click.command('plot:sentence-pca')

|

||||||

|

def sentence_pca():

|

||||||

|

filename = "embedding_sentence_pca.png"

|

||||||

|

DB = connect()

|

||||||

|

|

||||||

|

data = DB.query("""

|

||||||

|

SELECT

|

||||||

|

pca.first

|

||||||

|

,pca.second

|

||||||

|

,b.bias as label

|

||||||

|

FROM top.story_embeddings_pca pca

|

||||||

|

JOIN top.stories s

|

||||||

|

ON s.id = pca.story_id

|

||||||

|

JOIN top.publisher_bias pb

|

||||||

|

ON pb.publisher_id = s.publisher_id

|

||||||

|

JOIN bias_ratings b

|

||||||

|

ON b.id = pb.bias_id

|

||||||

|

""").df()

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

ax = sns.scatterplot(x=data['first'], y=data['second'], hue=data['label'])

|

||||||

|

ax.set(title="pca components vs. bias label", xlabel="first component", ylabel="second component")

|

||||||

|

plt.savefig(out_path / filename)

|

||||||

|

|

||||||

|

@click.command('plot:avg-sentence-pca')

|

||||||

|

def avg_sentence_pca():

|

||||||

|

filename = "avg_embedding_sentence_pca.png"

|

||||||

|

DB = connect()

|

||||||

|

|

||||||

|

data = DB.query("""

|

||||||

|

SELECT

|

||||||

|

pca.first

|

||||||

|

,pca.second

|

||||||

|

,p.tld

|

||||||

|

,b.bias as label

|

||||||

|

FROM top.publisher_embeddings_pca pca

|

||||||

|

JOIN top.publishers p

|

||||||

|

ON p.id = pca.publisher_id

|

||||||

|

JOIN top.publisher_bias pb

|

||||||

|

ON pb.publisher_id = p.id

|

||||||

|

JOIN bias_ratings b

|

||||||

|

ON b.id = pb.bias_id

|

||||||

|

""").df()

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

ax = sns.scatterplot(x=data['first'], y=data['second'], hue=data['label'])

|

||||||

|

ax.set(title="avg. publisher embedding pca components vs. bias label", xlabel="first component", ylabel="second component")

|

||||||

|

plt.savefig(out_path / filename)

|

||||||

|

|

||||||

|

@click.command('plot:sentence-confusion')

|

||||||

|

def sentence_confusion():

|

||||||

|

from sklearn.model_selection import train_test_split

|

||||||

|

from sklearn.neighbors import KNeighborsClassifier

|

||||||

|

from sklearn.metrics import ConfusionMatrixDisplay

|

||||||

|

|

||||||

|

filename = "sentence_confusion.png"

|

||||||

|

|

||||||

|

embeddings = np.load(data_path / 'embeddings.npy')

|

||||||

|

embedding_ids = np.load(data_path / 'embedding_ids.npy')

|

||||||

|

ids = pd.DataFrame(embedding_ids, columns=['story_id']).reset_index()

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

|

data = DB.query("""

|

||||||

|

SELECT

|

||||||

|

ids.index

|

||||||

|

,s.id

|

||||||

|

,b.ordinal

|

||||||

|

FROM ids

|

||||||

|

JOIN top.stories s

|

||||||

|

ON ids.story_id = s.id

|

||||||

|

JOIN top.publisher_bias pb

|

||||||

|

ON pb.publisher_id = s.publisher_id

|

||||||

|

JOIN bias_ratings b

|

||||||

|

ON b.id = pb.bias_id

|

||||||

|

""").df()

|

||||||

|

pub = DB.query("""

|

||||||

|

SELECT

|

||||||

|

*

|

||||||

|

FROM top.publishers

|

||||||

|

""").df()

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

train, test = train_test_split(data)

|

||||||

|

train_x, train_y = embeddings[train['index']], train['ordinal']

|

||||||

|

test_x, test_y = embeddings[test['index']], test['ordinal']

|

||||||

|

|

||||||

|

model = KNeighborsClassifier(n_neighbors=5)

|

||||||

|

model.fit(train_x, train_y)

|

||||||

|

pred = model.predict(test_x)

|

||||||

|

|

||||||

|

|

||||||

|

fig, ax = plt.subplots(figsize=(10, 5))

|

||||||

|

ConfusionMatrixDisplay.from_predictions(test_y, pred, ax=ax)

|

||||||

|

ticklabels = ['left', 'left-center', 'center', 'right-center', 'right']

|

||||||

|

ax.set(title="confusion matrix for kNN classifier on test data.", xticklabels=ticklabels, yticklabels=ticklabels)

|

||||||

|

plt.savefig(out_path / filename)

|

||||||

|

plt.close()

|

||||||

|

|

||||||

|

print(f"saved plot: {filename}")

|

||||||

|

|

@ -0,0 +1,60 @@

|

||||||

|

import click

|

||||||

|

from data.main import connect

|

||||||

|

import os

|

||||||

|

from pathlib import Path

|

||||||

|

import seaborn as sns

|

||||||

|

import matplotlib.pyplot as plt

|

||||||

|

import numpy as np

|

||||||

|

import pandas as pd

|

||||||

|

|

||||||

|

out_path = Path(os.getenv('DATA_MINING_DOC_DIR')) / 'figures'

|

||||||

|

@click.command('plot:sentiment-over-time')

|

||||||

|

def over_time():

|

||||||

|

filename = "sentiment_over_time.png"

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

|

data = DB.sql("""

|

||||||

|

SELECT

|

||||||

|

avg(sent.class_id) as sentiment

|

||||||

|

,s.published_at as date

|

||||||

|

FROM top.story_sentiments sent

|

||||||

|

JOIN top.stories s

|

||||||

|

ON s.id = sent.story_id

|

||||||

|

GROUP BY

|

||||||

|

s.published_at

|

||||||

|

""").df()

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

ax = sns.scatterplot(x=data['date'], y=data['sentiment'])

|

||||||

|

ax.set(title="sentiment vs. time")

|

||||||

|

plt.tight_layout()

|

||||||

|

plt.savefig(out_path / filename)

|

||||||

|

print(f"saved: {filename}")

|

||||||

|

@click.command('plot:bias-vs-sentiment-over-time')

|

||||||

|

def bias_over_time():

|

||||||

|

filename = "bias_vs_sentiment_over_time.png"

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

|

data = DB.sql("""

|

||||||

|

SELECT

|

||||||

|

avg(sent.class_id) as sentiment

|

||||||

|

,s.published_at as date

|

||||||

|

,b.id as bias_id

|

||||||

|

FROM top.story_sentiments sent

|

||||||

|

JOIN top.stories s

|

||||||

|

ON s.id = sent.story_id

|

||||||

|

JOIN publisher_bias pb

|

||||||

|

ON pb.publisher_id = s.publisher_id

|

||||||

|

JOIN bias_ratings b

|

||||||

|

ON b.id = pb.bias_id

|

||||||

|

GROUP BY

|

||||||

|

s.published_at

|

||||||

|

,b.id

|

||||||

|

""").df()

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

ax = sns.relplot(x=data['date'], y=data['sentiment'], col=data['bias_id'])

|

||||||

|

ax.set(title="sentiment vs. time grouped by bias")

|

||||||

|

plt.tight_layout()

|

||||||

|

plt.savefig(out_path / filename)

|

||||||

|

print(f"saved: {filename}")

|

||||||

|

|

@ -72,16 +72,71 @@ def embed(chunks):

|

||||||

print(f"ids saved: {save_to}")

|

print(f"ids saved: {save_to}")

|

||||||

|

|

||||||

|

|

||||||

@click.command('sentence:create-pca-table')

|

@click.command('sentence:create-avg-pca-table')

|

||||||

def create_table():

|

def create_avg_pca_table():

|

||||||

from sklearn import linear_model

|

from sklearn.decomposition import PCA

|

||||||

data_path = Path(os.getenv('DATA_MINING_DATA_DIR'))

|

data_path = Path(os.getenv('DATA_MINING_DATA_DIR'))

|

||||||

|

|

||||||

|

|

||||||

embeddings = np.load(data_path / 'embeddings.npy')

|

embeddings = np.load(data_path / 'embeddings.npy')

|

||||||

embedding_ids = np.load(data_path / 'embedding_ids.npy')

|

embedding_ids = np.load(data_path / 'embedding_ids.npy')

|

||||||

ids = pd.DataFrame(embedding_ids, columns=['story_id']).reset_index()

|

ids = pd.DataFrame(embedding_ids, columns=['story_id']).reset_index()

|

||||||

DB = connect()

|

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

|

data = DB.query("""

|

||||||

|

SELECT

|

||||||

|

ids.index

|

||||||

|

,s.id

|

||||||

|

,s.publisher_id

|

||||||

|

,b.ordinal

|

||||||

|

FROM ids

|

||||||

|

JOIN top.stories s

|

||||||

|

ON ids.story_id = s.id

|

||||||

|

JOIN top.publisher_bias pb

|

||||||

|

ON pb.publisher_id = s.publisher_id

|

||||||

|

JOIN bias_ratings b

|

||||||

|

ON b.id = pb.bias_id

|

||||||

|

""").df()

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

results = []

|

||||||

|

for publisher_id, group in data.groupby(['publisher_id']):

|

||||||

|

avg = embeddings[group['index']].mean(axis=0)

|

||||||

|

ordinal = group['ordinal'].iloc[0]

|

||||||

|

results.append({'publisher_id' : publisher_id, 'embedding' : avg, 'ordinal' : ordinal})

|

||||||

|

results = pd.DataFrame(results)

|

||||||

|

|

||||||

|

x = np.stack(results['embedding'])

|

||||||

|

y = results['ordinal']

|

||||||

|

|

||||||

|

model = PCA(n_components=2)

|

||||||

|

pred = model.fit_transform(x)

|

||||||

|

results['first'] = pred[:, 0]

|

||||||

|

results['second'] = pred[:, 1]

|

||||||

|

|

||||||

|

table_name = "top.publisher_embeddings_pca"

|

||||||

|

DB = connect()

|

||||||

|

DB.query(f"""

|

||||||

|

CREATE OR REPLACE TABLE {table_name} AS

|

||||||

|

SELECT

|

||||||

|

results.publisher_id as publisher_id

|

||||||

|

,results.first as first

|

||||||

|

,results.second as second

|

||||||

|

FROM results

|

||||||

|

""")

|

||||||

|

DB.close()

|

||||||

|

print(f"created {table_name}")

|

||||||

|

|

||||||

|

|

||||||

|

@click.command('sentence:create-pca-table')

|

||||||

|

def create_pca_table():

|

||||||

|

from sklearn.decomposition import PCA

|

||||||

|

data_path = Path(os.getenv('DATA_MINING_DATA_DIR'))

|

||||||

|

|

||||||

|

embeddings = np.load(data_path / 'embeddings.npy')

|

||||||

|

embedding_ids = np.load(data_path / 'embedding_ids.npy')

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

data = DB.query("""

|

data = DB.query("""

|

||||||

SELECT

|

SELECT

|

||||||

ids.index

|

ids.index

|

||||||

|

|

@ -95,19 +150,38 @@ def create_table():

|

||||||

JOIN bias_ratings b

|

JOIN bias_ratings b

|

||||||

ON b.id = pb.bias_id

|

ON b.id = pb.bias_id

|

||||||

""").df()

|

""").df()

|

||||||

|

pub = DB.query("""

|

||||||

|

SELECT

|

||||||

|

*

|

||||||

|

FROM top.publishers

|

||||||

|

""").df()

|

||||||

|

DB.close()

|

||||||

|

|

||||||

x = embeddings[data['index']]

|

x = embeddings[data['index']]

|

||||||

y = data['ordinal'].to_numpy().reshape(-1, 1)

|

y = data['ordinal'].to_numpy().reshape(-1, 1)

|

||||||

|

model = PCA(n_components=2)

|

||||||

|

pred = model.fit_transform(x)

|

||||||

|

data['first'] = pred[:, 0]

|

||||||

|

data['second'] = pred[:, 1]

|

||||||

|

|

||||||

reg = linear_model.LinearRegression()

|

table_name = f"top.story_embeddings_pca"

|

||||||

|

|

||||||

reg.fit(x, y)

|

DB = connect()

|

||||||

|

DB.query(f"""

|

||||||

reg.coef_.shape

|

CREATE OR REPLACE TABLE {table_name} AS

|

||||||

|

SELECT

|

||||||

|

data.id as story_id

|

||||||

|

,data.first as first

|

||||||

|

,data.second as second

|

||||||

|

FROM data

|

||||||

|

""")

|

||||||

|

DB.close()

|

||||||

|

print(f"created {table_name}")

|

||||||

|

|

||||||

@click.command('sentence:create-svm-table')

|

@click.command('sentence:create-svm-table')

|

||||||

def create_svm_table():

|

def create_svm_table():

|

||||||

from sklearn import svm

|

from sklearn import svm

|

||||||

|

from sklearn.linear_model import SGDClassifier

|

||||||

data_path = Path(os.getenv('DATA_MINING_DATA_DIR'))

|

data_path = Path(os.getenv('DATA_MINING_DATA_DIR'))

|

||||||

|

|

||||||

embeddings = np.load(data_path / 'embeddings.npy')

|

embeddings = np.load(data_path / 'embeddings.npy')

|

||||||

|

|

@ -133,6 +207,8 @@ def create_svm_table():

|

||||||

#y = data['ordinal'].to_numpy().reshape(-1, 1)

|

#y = data['ordinal'].to_numpy().reshape(-1, 1)

|

||||||

y = data['ordinal']

|

y = data['ordinal']

|

||||||

|

|

||||||

clf = svm.SVC()

|

model = SGDClassifier()

|

||||||

pred = clf.fit(x, y)

|

pred = model.fit(x, y)

|

||||||

|

data['pred'] = pred.predict(x)

|

||||||

|

data

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -0,0 +1,86 @@

|

||||||

|

from transformers import DistilBertTokenizer, DistilBertForSequenceClassification

|

||||||

|

import torch

|

||||||

|

import torch.nn.functional as F

|

||||||

|

from data import connect, data_dir

|

||||||

|

import numpy as np

|

||||||

|

from tqdm import tqdm

|

||||||

|

import click

|

||||||

|

|

||||||

|

@click.option('-c', '--chunks', type=int, default=500, show_default=True)

|

||||||

|

@click.command("sentiment:extract")

|

||||||

|

def extract(chunks):

|

||||||

|

device = 'cuda' if torch.cuda.is_available() else 'cpu'

|

||||||

|

chunks = 1000

|

||||||

|

|

||||||

|

# Load model from HuggingFace Hub

|

||||||

|

tokenizer = DistilBertTokenizer.from_pretrained("distilbert-base-uncased")

|

||||||

|

model = DistilBertForSequenceClassification.from_pretrained("distilbert-base-uncased")

|

||||||

|

model = model.to(device)

|

||||||

|

|

||||||

|

|

||||||

|

# load data

|

||||||

|

DB = connect()

|

||||||

|

table = DB.sql("""

|

||||||

|

select

|

||||||

|

id

|

||||||

|

,title

|

||||||

|

from stories

|

||||||

|

order by id desc

|

||||||

|

""").df()

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

# normalize text

|

||||||

|

table['title'] = table['title'].str.normalize('NFKD').str.encode('ascii', errors='ignore').str.decode('utf-8')

|

||||||

|

|

||||||

|

|

||||||

|

chunked = np.array_split(table, chunks)

|

||||||

|

|

||||||

|

# generate embeddings from list of titles

|

||||||

|

iterator = tqdm(chunked, 'embedding')

|

||||||

|

sentiments = []

|

||||||

|

story_ids = []

|

||||||

|

for _, chunk in enumerate(iterator):

|

||||||

|

sentences = chunk['title'].tolist()

|

||||||

|

ids = chunk['id'].tolist()

|

||||||

|

# Tokenize sentences

|

||||||

|

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

|

||||||

|

# Compute token embeddings

|

||||||

|

with torch.no_grad():

|

||||||

|

logits = model(**encoded_input.to(device)).logits

|

||||||

|

sentiment = logits.argmax(axis=1).tolist()

|

||||||

|

sentiments.append(sentiment)

|

||||||

|

story_ids.append(ids)

|

||||||

|

|

||||||

|

sentiments = np.concatenate(sentiments)

|

||||||

|

story_ids = np.concatenate(story_ids)

|

||||||

|

|

||||||

|

# save embeddings

|

||||||

|

save_to = data_dir() / 'sentiment.npy'

|

||||||

|

np.save(save_to, sentiments)

|

||||||

|

print(f"sentiments saved: {save_to}")

|

||||||

|

|

||||||

|

# save ids

|

||||||

|

save_to = data_dir() / 'sentiment_ids.npy'

|

||||||

|

np.save(save_to, story_ids)

|

||||||

|

print(f"ids saved: {save_to}")

|

||||||

|

|

||||||

|

@click.command('sentiment:load')

|

||||||

|

def load():

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

|

sentiments = np.load(data_dir() / 'sentiment.npy')

|

||||||

|

story_ids = np.load(data_dir() / 'sentiment_ids.npy')

|

||||||

|

data = pd.DataFrame(story_ids, columns=['story_id']).reset_index()

|

||||||

|

data['sentiment_id'] = sentiments

|

||||||

|

|

||||||

|

DB.query("""

|

||||||

|

CREATE OR REPLACE TABLE top.story_sentiments AS

|

||||||

|

SELECT

|

||||||

|

data.story_id

|

||||||

|

,data.sentiment_id as class_id

|

||||||

|

,CASE WHEN data.sentiment_id = 1 THEN 'positive' ELSE 'negative' end as label

|

||||||

|

FROM data

|

||||||

|

JOIN top.stories s

|

||||||

|

ON s.id = data.story_id

|

||||||

|

""")

|

||||||

|

DB.close()

|

||||||

Loading…

Reference in New Issue