_model: slides

---

title: CSCI 577 - Data Mining

---

body:

# Political Polarization

Matt Jensen

===

# Hypothesis

Political polarization is rising, and news articles are a proxy measure.

==

# Is this reasonable?

==

# Why is polarization rising?

Not my job, but there's research[ref](#references) to support it

==

# Sub-hypothesis

- The polarization increases near elections.

- The polarization is not evenly distributed across publishers.

- The polarization is not evenly distributed across political specturm.

==

# Sub-sub-hypothesis

- Similarly polarized publishers link to each other.

- 'Mainstream' media uses more neutral titles.

- Highly polarized publications don't last as long.

===

# Data Source(s)

memeorandum.com

allsides.com

huggingface.com

===

===

# memeorandum.com

- News aggregation site.

- Was really famous before Google News.

- Still aggregates sites today.

==

# Why Memeorandum?

- Behavioral: I only read titles sometimes. (doom scrolling).

- Behavioral: It's my source of news (with sister site TechMeme.com).

- Convenient: most publishers block bots.

- Convenient: dead simple html to parse.

- Archival: all headlines from 2006 forward.

- Archival: automated, not editorialized.

===

===

# AllSides.com

- Rates news publications as left, center or right.

- Ratings combine:

- blind bias surveys.

- editorial reviews.

- third party research.

- community voting.

- Originally scraped website, but direct access eventually.

==

# Why AllSides?

- Behavioral: One of the first google results on bias apis.

- Convenient: Ordinal ratings [-2: very left, 2: very right].

- Convenient: Easy format.

- Archival: Covers 1400 publishers.

===

===

# HuggingFace.com

- Deep Learning library.

- Lots of pretrained models.

- Easy, off the shelf word/sentence embeddings and text classification models.

==

# Why HuggingFace?

- Behavioral: Language Models are HOT right now.

- Behavioral: The dataset needed more features.

- Convenient: Literally 5 lines of python.

- Convenient: Testing different model performance was easy.

- Archival: Lots of pretrained classification tasks.

===

# Data Structures

Stories

- Top level stories.

- title.

- publisher.

- author.

- Related discussion.

- publisher.

- uses 'parent' story as a source.

- Stream of stories (changes constantly).

==

# Data Structures

Bias

- Per publisher.

- name.

- label.

- agree/disagree vote by community.

- Name could be semi-automatically joined to stories.

==

# Data Structures

Embeddings

- Per story title.

- sentence embedding (n, 384).

- sentiment classification (n, 1).

- emotional classification (n, 1).

- ~ 1 hour of inference time to map story titles and descriptions.

===

# Data Collection

==

# Data Collection

Story Scraper (simplified)

```python

day = timedelta(days=1)

cur = date(2005, 10, 1)

end = date.today()

while cur <= end:

cur = cur + day

save_as = output_dir / f"{cur.strftime('%y-%m-%d')}.html"

url = f"https://www.memeorandum.com/{cur.strftime('%y%m%d')}/h2000"

r = requests.get(url)

with open(save_as, 'w') as f:

f.write(r.text)

```

==

# Data Collection

Bias Scraper (hard)

```python

...

bias_html = DATA_DIR / 'allsides.html'

parser = etree.HTMLParser()

tree = etree.parse(str(bias_html), parser)

root = tree.getroot()

rows = root.xpath('//table[contains(@class,"views-table")]/tbody/tr')

ratings = []

for row in rows:

rating = dict()

...

```

==

# Data Collection

Bias Scraper (easy)

==

# Data Collection

Embeddings (easy)

```python

# table = ...

tokenizer = AutoTokenizer.from_pretrained("roberta-base")

model = AutoModel.from_pretrained("roberta-base")

for chunk in table:

tokens = tokenizer(chunk, add_special_tokens = True, truncation = True, padding = "max_length", max_length=92, return_attention_mask = True, return_tensors = "pt")

outputs = model(**tokens)

embeddings = outputs.last_hidden_state.detach().numpy()

...

```

==

# Data Collection

Classification Embeddings (medium)

```python

...

outputs = model(**tokens)[0].detach().numpy()

scores = 1 / (1 + np.exp(-outputs)) # Sigmoid

class_ids = np.argmax(scores, axis=1)

for i, class_id in enumerate(class_ids):

results.append({"story_id": ids[i], "label" : model.config.id2label[class_id]})

...

```

===

# Data Selection

==

# Data Selection

Stories

- Clip the first and last full year of stories.

- Remove duplicate stories (big stories span multiple days).

==

# Data Selection

Publishers

- Combine subdomains of stories.

- blog.washingtonpost.com and washingtonpost.com are considered the same publisher.

- This could be bad. For example: opinion.wsj.com != wsj.com.

==

# Data Selection

Links

- Select only stories with publishers whose story had been a 'parent' ('original publishers').

- Eliminates small blogs and non-original news.

- Eliminate publishers without links to original publishers.

- Eliminate silo'ed publications.

- Link matrix is square and low'ish dimensional.

==

# Data Selection

Bias

- Keep all ratings, even ones with low agree/disagree ratio.

- Join datasets on publisher name.

- Not automatic (look up Named Entity Recognition).

- Started with 'jaro winkler similarity' then manually from there.

- Use numeric values

- [left: -2, left-center: -1, ...]

===

# Descriptive Stats

Raw

| metric | value |

|:------------------|--------:|

| total stories | 299714 |

| total related | 960111 |

| publishers | 7031 |

| authors | 34346 |

| max year | 2023 |

| min year | 2005 |

| top level domains | 7063 |

==

# Descriptive Stats

Stories Per Publisher

==

# Descriptive Stats

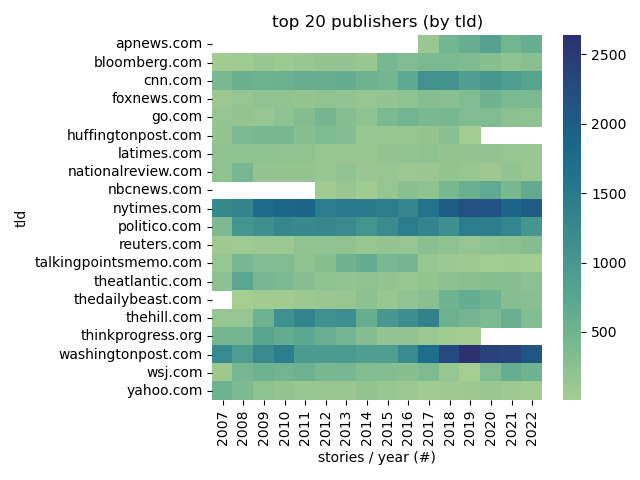

Top Publishers

==

# Descriptive Stats

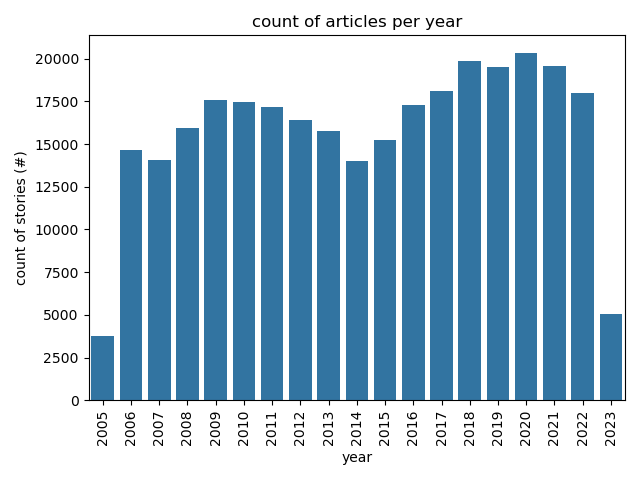

Articles Per Year

==

# Descriptive Stats

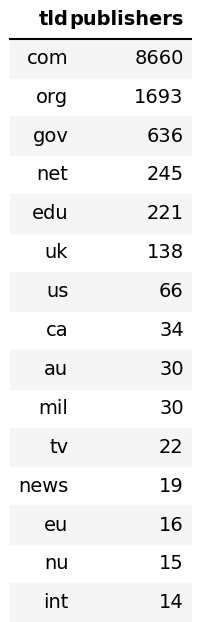

Common TLDs

==

# Descriptive Stats

Post Process

| key | value |

|:------------------|--------:|

| total stories | 251553 |

| total related | 815183 |

| publishers | 223 |

| authors | 23809 |

| max year | 2022 |

| min year | 2006 |

| top level domains | 234 |

===

# Experiments

1. **clustering** on link similarity.

2. **classification** on link similarity.

3. **classification** on sentence embedding.

4. **classification** on sentiment analysis.

5. **regression** on emotional classification over time and publication.

===

# Experiment 1

Setup

- Create one-hot encoding of links between publishers.

- Cluster the encoding.

- Expect similar publications in same cluster.

- Use PCA to visualize clusters.

Note:

Principle Component Analysis:

- a statistical technique for reducing the dimensionality of a dataset.

- linear transformation into a new coordinate system where (most of) the variation data can be described with fewer dimensions than the initial data.

==

# Experiment 1

One Hot Encoding

| publisher | nytimes| wsj| newsweek| ...|

|:----------|--------:|----:|--------:|----:|

| nytimes | 1| 1| 1| ...|

| wsj | 1| 1| 0| ...|

| newsweek | 0| 0| 1| ...|

| ... | ...| ...| ...| ...|

==

# Experiment 1

n-Hot Encoding

| publisher | nytimes| wsj| newsweek| ...|

|:----------|--------:|----:|--------:|----:|

| nytimes | 11| 1| 141| ...|

| wsj | 1| 31| 0| ...|

| newsweek | 0| 0| 1| ...|

| ... | ...| ...| ...| ...|

==

# Experiment 1

Normalized n-Hot Encoding

| publisher | nytimes| wsj| newsweek| ...|

|:----------|--------:|----:|--------:|----:|

| nytimes | 0| 0.4| 0.2| ...|

| wsj | 0.2| 0| 0.4| ...|

| newsweek | 0.0| 0.0| 0.0| ...|

| ... | ...| ...| ...| ...|

==

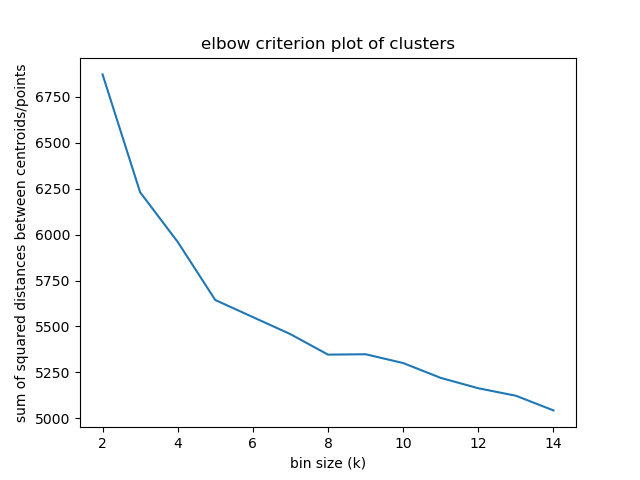

# Experiment 1

Elbow criterion

Note:

The elbow method looks at the percentage of explained variance as a function of the number of clusters:

One should choose a number of clusters so that adding another cluster doesn't give much better modeling of the data.

Percentage of variance explained is the ratio of the between-group variance to the total variance,

==

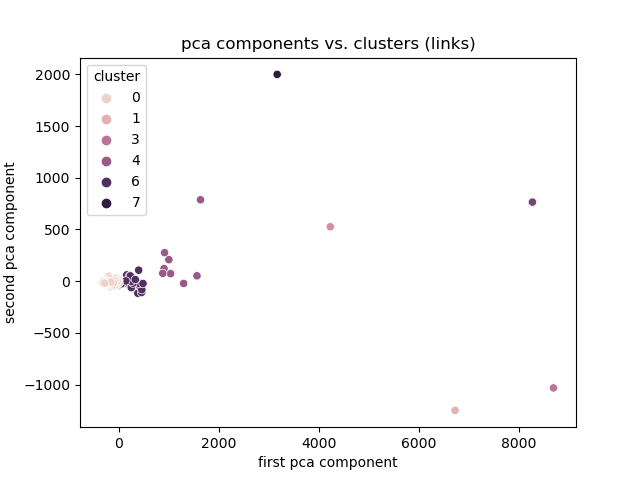

# Experiment 1

Link Magnitude

==

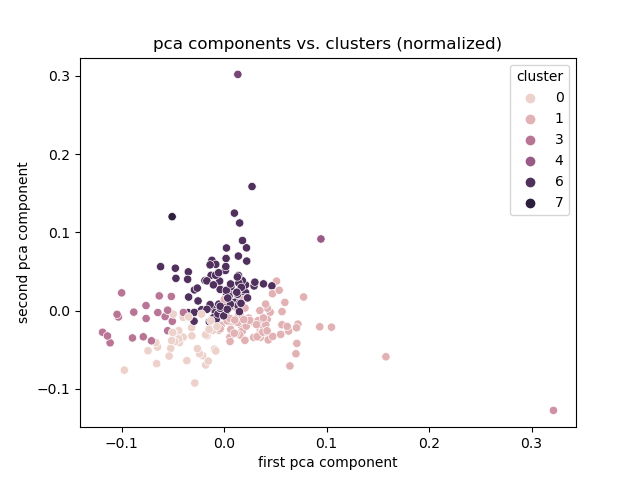

# Experiment 1

Normalized

==

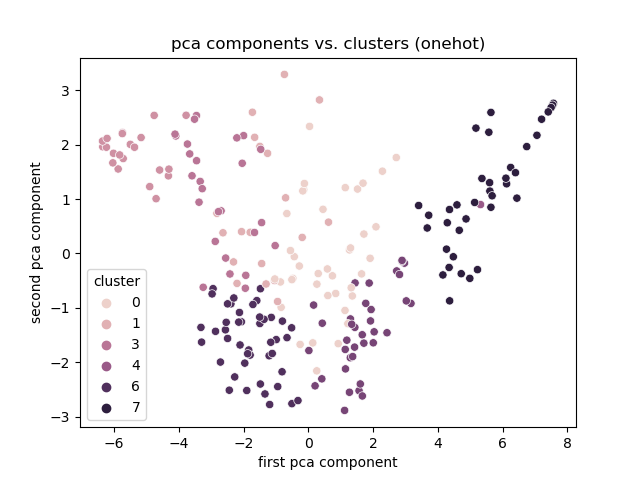

# Experiment 1

Onehot

==

# Experiment 1

Discussion

- Best encoding: One hot.

- Clusters based on total links otherwise.

- Clusters, but no explanation

- Limitation: need the link encoding to cluster.

- Smaller publishers might not link very much.

===

# Experiment 2

Setup

- Create features. :

- Publisher frequency.

- Reuse link encodings.

- Create classes:

- Join bias classifications.

- Train classifier.

Note:

==

# Experiment 2

Descriptive stats

| metric | value |

|:------------|:----------|

| publishers | 1582 |

| labels | 6 |

| left | 482 |

| center | 711 |

| right | 369 |

| agree range | [0.0-1.0] |

==

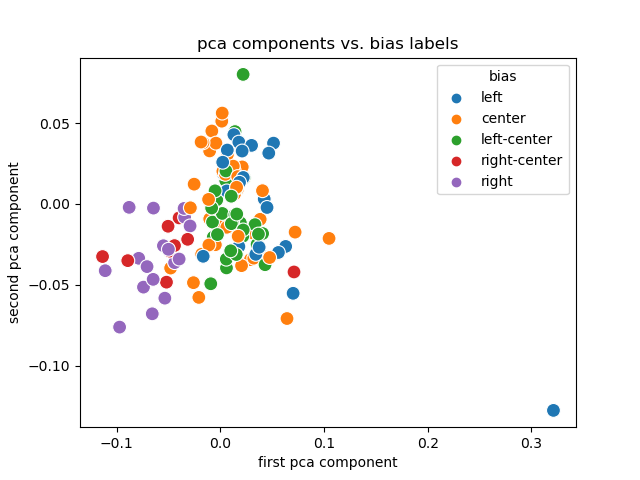

# Experiment 2

PCA + Labels

==

# Experiment 2

Discussion

- Link encodings (and their PCA) are useful.

- Labels are (sort of) separated and clustered.

- Creating them for smaller publishers is trivial.

==

# Experiment 2

Limitations

- Dependent on accurate rating.

- Ordinal ratings not available.

- Dependent on accurate joining across datasets.

- Entire publication is rated, not authors.

- Don't know what to do with community rating.

===

# Experiment 3

Setup

==

# Limitations

- Many different authors under the same publisher.

- Publishers use syndication.

- Bias ratings are biased.

===

# Questions

===

# References

[1]: Stewart, A.J. et al. 2020. Polarization under rising inequality and economic decline. Science Advances. 6, 50 (Dec. 2020), eabd4201. DOI:https://doi.org/10.1126/sciadv.abd4201.

Note: