v1.0 of presentation.

|

|

@ -2,3 +2,8 @@

|

||||||

*.swp

|

*.swp

|

||||||

__pycache__

|

__pycache__

|

||||||

tmp.py

|

tmp.py

|

||||||

|

.env

|

||||||

|

*.aux

|

||||||

|

*.log

|

||||||

|

*.out

|

||||||

|

tmp.*

|

||||||

|

|

|

||||||

|

|

@ -0,0 +1,11 @@

|

||||||

|

.PHONY:to_wwu

|

||||||

|

|

||||||

|

all: to_wwu

|

||||||

|

|

||||||

|

to_wwu:

|

||||||

|

rsync -avz ~/577/repo/docs/figures/ linux-04:/home/jensen33/Dev/studentweb/assets/static/577/

|

||||||

|

scp ~/577/repo/docs/presentation.md linux-04:/home/jensen33/Dev/studentweb/content/577/contents.lr

|

||||||

|

scp ~/Dev/www.publicmatt.com/models/slides.ini linux-04:/home/jensen33/Dev/studentweb/models/

|

||||||

|

scp ~/Dev/www.publicmatt.com/templates/slides.html linux-04:/home/jensen33/Dev/studentweb/templates/

|

||||||

|

rsync -avz ~/Dev/www.publicmatt.com/assets/static/revealjs linux-04:/home/jensen33/Dev/studentweb/assets/static/

|

||||||

|

ssh linux-04 cd /home/jensen33/Dev/studentweb \; make

|

||||||

|

|

@ -0,0 +1,3 @@

|

||||||

|

paper.pdf: paper.tex

|

||||||

|

pdflatex $^ -o $@

|

||||||

|

evince $@

|

||||||

|

After Width: | Height: | Size: 47 KiB |

|

After Width: | Height: | Size: 22 KiB |

|

After Width: | Height: | Size: 24 KiB |

|

After Width: | Height: | Size: 20 KiB |

|

After Width: | Height: | Size: 33 KiB |

|

After Width: | Height: | Size: 29 KiB |

|

After Width: | Height: | Size: 48 KiB |

|

After Width: | Height: | Size: 61 KiB |

|

After Width: | Height: | Size: 51 KiB |

|

After Width: | Height: | Size: 22 KiB |

|

After Width: | Height: | Size: 54 KiB |

|

|

@ -0,0 +1,61 @@

|

||||||

|

\documentclass{article}

|

||||||

|

\usepackage{multicol}

|

||||||

|

\usepackage{hyperref}

|

||||||

|

\title{Data Mining CS 571}

|

||||||

|

\author{Matt Jensen}

|

||||||

|

\date{2023-04-25}

|

||||||

|

|

||||||

|

\begin{document}

|

||||||

|

\maketitle

|

||||||

|

|

||||||

|

\section*{Abstract}

|

||||||

|

|

||||||

|

News organizations have been repeatedly accused of being partisan.

|

||||||

|

Additionally, they have been accused of polarizing dicussion to drive up revenue and engagement.

|

||||||

|

This paper seeks to quantify those claims by classifying the degree to which news headlines have become more emotionally charged of time.

|

||||||

|

A secondary goal is the investigate whether news organization have been uniformly polarized, or if one pole has been 'moving' more rapidly away from the 'middle'.

|

||||||

|

This analysis will probe to what degree has the \href{https://en.wikipedia.org/wiki/Overton_window}{Overton Window} has shifted in the media.

|

||||||

|

Naom Chomsky had a hypothesis about manufactured consent that is beyond the scope of this paper, so we will restrict our analysis to the presence of agenda instead of the cause of it.

|

||||||

|

|

||||||

|

\begin{multicols}{2}

|

||||||

|

|

||||||

|

\section{Data Preparation}

|

||||||

|

The subject of analysis is a set of news article headlines scraped from the news aggregation site \href{https://mememorandum.com}{Memeorandum} for news stories from 2006 to 2022.

|

||||||

|

Each news article has a title, author, description, publisher, publish date, url and related discussions.

|

||||||

|

The site also has a concept of references, where a main, popular story may be covered by other sources.

|

||||||

|

This link association might be used to support one or more of the hypothesis of the main analysis.

|

||||||

|

After scraping the site, the data will need to be deduplicated and normalized to minimize storage costs and processing errors.

|

||||||

|

What remains after these cleaning steps is approximitely 6,400 days of material, 300,000 distinct headlines from 21,000 publishers and 34,000 authors used in the study.

|

||||||

|

|

||||||

|

\section{Missing Data Policy}

|

||||||

|

|

||||||

|

The largest data policy that will have to be dealt with is news organizations that share the same parent company, but might have slightly different names.

|

||||||

|

Wall Street Journal news is drastically different than their opinion section.

|

||||||

|

Other organizations have slightly different names for the same thing and a product of the aggregation service and not due to any real difference.

|

||||||

|

Luckily, most of the anaylsis is operating on the content of the news headlines, which do not suffer from this data impurity.

|

||||||

|

|

||||||

|

\section{Classification Task}

|

||||||

|

|

||||||

|

The classification of news titles into emotional categories was accomplished by using a pretrained large langauge model from \href{https://huggingface.co/arpanghoshal/EmoRoBERTa}{HuggingFace}.

|

||||||

|

This model was trained on \href{https://ai.googleblog.com/2021/10/goemotions-dataset-for-fine-grained.html}{a dataset curated and published by Google} which manually classified a collection of 58,000 comments into 28 emotions.

|

||||||

|

The classes for each article will be derived by tokenizing the title and running the model over the tokens, then grabbing the largest probabilty class from the output.

|

||||||

|

|

||||||

|

The data has been discretized into years.

|

||||||

|

Additionally, the publishers will have been discretized based of either principle component analysis on link similarity or based on the bias ratings of \href{https://www.allsides.com/media-bias/ratings}{All Sides}.

|

||||||

|

Given that the features of the dataset are sparse, it is not expected to have any useless attributes, unless the original hypothesis of a temporal trend proving to be false.

|

||||||

|

Of the features used in the analysis, there are enough data points that null or missing values can safely be excluded.

|

||||||

|

|

||||||

|

\section{Experiments}

|

||||||

|

|

||||||

|

No computational experiment have been done yet.

|

||||||

|

Generating the tokenized text, the word embedding and the emotional sentiment analysis have made up the bulk of the work thus far.

|

||||||

|

The bias ratings do not cover all publisher in the dataset, so the number of articles without a bias rating from their publisher will have to be calculated.

|

||||||

|

If it is less than 30\% of the articles, it might not make sense to use the bias ratings.

|

||||||

|

The creation and reduction of the link graph with principle component analysis will need to be done to visualize the relationship between related publishers.

|

||||||

|

|

||||||

|

\section{Results}

|

||||||

|

\textbf{TODO.}

|

||||||

|

|

||||||

|

\end{multicols}

|

||||||

|

|

||||||

|

\end{document}

|

||||||

|

|

@ -0,0 +1,552 @@

|

||||||

|

_model: slides

|

||||||

|

---

|

||||||

|

|

||||||

|

title: CSCI 577 - Data Mining

|

||||||

|

|

||||||

|

---

|

||||||

|

body:

|

||||||

|

|

||||||

|

# Political Polarization

|

||||||

|

|

||||||

|

Matt Jensen

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# Hypothesis

|

||||||

|

|

||||||

|

Political polarization is rising, and news articles are a proxy measure.

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Is this reasonable?

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Why is polarization rising?

|

||||||

|

|

||||||

|

Not my job, but there's research<sup>[ref](#references)</sup> to support it

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Sub-hypothesis

|

||||||

|

|

||||||

|

- The polarization increases near elections. <!-- .element: class="fragment" -->

|

||||||

|

- The polarization is not evenly distributed across publishers. <!-- .element: class="fragment" -->

|

||||||

|

- The polarization is not evenly distributed across political specturm. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Sub-sub-hypothesis

|

||||||

|

|

||||||

|

- Similarly polarized publishers link to each other. <!-- .element: class="fragment" -->

|

||||||

|

- 'Mainstream' media uses more neutral titles. <!-- .element: class="fragment" -->

|

||||||

|

- Highly polarized publications don't last as long. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# Data Source(s)

|

||||||

|

|

||||||

|

memeorandum.com <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

allsides.com <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

huggingface.com <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

<section data-background-iframe="https://www.memeorandum.com" data-background-interactive></section>

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# memeorandum.com

|

||||||

|

|

||||||

|

- News aggregation site. <!-- .element: class="fragment" -->

|

||||||

|

- Was really famous before Google News. <!-- .element: class="fragment" -->

|

||||||

|

- Still aggregates sites today. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Why Memeorandum?

|

||||||

|

|

||||||

|

- Behavioral: I only read titles sometimes. (doom scrolling). <!-- .element class="fragment" -->

|

||||||

|

- Behavioral: It's my source of news (with sister site TechMeme.com). <!-- .element class="fragment" -->

|

||||||

|

- Convenient: most publishers block bots. <!-- .element class="fragment" -->

|

||||||

|

- Convenient: dead simple html to parse. <!-- .element class="fragment" -->

|

||||||

|

- Archival: all headlines from 2006 forward. <!-- .element class="fragment" -->

|

||||||

|

- Archival: automated, not editorialized. <!-- .element class="fragment" -->

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

<section data-background-iframe="https://www.allsides.com/media-bias/ratings" data-background-interactive></section>

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# AllSides.com

|

||||||

|

|

||||||

|

- Rates news publications as left, center or right. <!-- .element: class="fragment" -->

|

||||||

|

- Ratings combine: <!-- .element: class="fragment" -->

|

||||||

|

- blind bias surveys.

|

||||||

|

- editorial reviews.

|

||||||

|

- third party research.

|

||||||

|

- community voting.

|

||||||

|

- Originally scraped website, but direct access eventually. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Why AllSides?

|

||||||

|

|

||||||

|

- Behavioral: One of the first google results on bias apis. <!-- .element class="fragment" -->

|

||||||

|

- Convenient: Ordinal ratings [-2: very left, 2: very right]. <!-- .element class="fragment" -->

|

||||||

|

- Convenient: Easy format. <!-- .element class="fragment" -->

|

||||||

|

- Archival: Covers 1400 publishers. <!-- .element class="fragment" -->

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

<section data-background-iframe="https://huggingface.co/models" data-background-interactive></section>

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# HuggingFace.com

|

||||||

|

|

||||||

|

- Deep Learning library. <!-- .element: class="fragment" -->

|

||||||

|

- Lots of pretrained models. <!-- .element: class="fragment" -->

|

||||||

|

- Easy, off the shelf word/sentence embeddings and text classification models. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Why HuggingFace?

|

||||||

|

|

||||||

|

- Behavioral: Language Models are HOT right now. <!-- .element: class="fragment" -->

|

||||||

|

- Behavioral: The dataset needed more features.<!-- .element: class="fragment" -->

|

||||||

|

- Convenient: Literally 5 lines of python.<!-- .element: class="fragment" -->

|

||||||

|

- Convenient: Testing different model performance was easy.<!-- .element: class="fragment" -->

|

||||||

|

- Archival: Lots of pretrained classification tasks.<!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# Data Structures

|

||||||

|

Stories

|

||||||

|

|

||||||

|

- Top level stories. <!-- .element: class="fragment" -->

|

||||||

|

- title.

|

||||||

|

- publisher.

|

||||||

|

- author.

|

||||||

|

- Related discussion. <!-- .element: class="fragment" -->

|

||||||

|

- publisher.

|

||||||

|

- uses 'parent' story as a source.

|

||||||

|

- Stream of stories (changes constantly). <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Data Structures

|

||||||

|

Bias

|

||||||

|

|

||||||

|

- Per publisher. <!-- .element: class="fragment" -->

|

||||||

|

- name.

|

||||||

|

- label.

|

||||||

|

- agree/disagree vote by community.

|

||||||

|

- Name could be semi-automatically joined to stories. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Data Structures

|

||||||

|

Embeddings

|

||||||

|

|

||||||

|

- Per story title. <!-- .element: class="fragment" -->

|

||||||

|

- sentence embedding (n, 384).

|

||||||

|

- sentiment classification (n, 1).

|

||||||

|

- emotional classification (n, 1).

|

||||||

|

- ~ 1 hour of inference time to map story titles and descriptions. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# Data Collection

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Data Collection

|

||||||

|

|

||||||

|

Story Scraper (simplified)

|

||||||

|

|

||||||

|

```python

|

||||||

|

day = timedelta(days=1)

|

||||||

|

cur = date(2005, 10, 1)

|

||||||

|

end = date.today()

|

||||||

|

while cur <= end:

|

||||||

|

cur = cur + day

|

||||||

|

save_as = output_dir / f"{cur.strftime('%y-%m-%d')}.html"

|

||||||

|

url = f"https://www.memeorandum.com/{cur.strftime('%y%m%d')}/h2000"

|

||||||

|

r = requests.get(url)

|

||||||

|

with open(save_as, 'w') as f:

|

||||||

|

f.write(r.text)

|

||||||

|

```

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Data Collection

|

||||||

|

Bias Scraper (hard)

|

||||||

|

|

||||||

|

```python

|

||||||

|

...

|

||||||

|

bias_html = DATA_DIR / 'allsides.html'

|

||||||

|

parser = etree.HTMLParser()

|

||||||

|

tree = etree.parse(str(bias_html), parser)

|

||||||

|

root = tree.getroot()

|

||||||

|

rows = root.xpath('//table[contains(@class,"views-table")]/tbody/tr')

|

||||||

|

|

||||||

|

ratings = []

|

||||||

|

for row in rows:

|

||||||

|

rating = dict()

|

||||||

|

...

|

||||||

|

```

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Data Collection

|

||||||

|

Bias Scraper (easy)

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Data Collection

|

||||||

|

Embeddings (easy)

|

||||||

|

|

||||||

|

```python

|

||||||

|

# table = ...

|

||||||

|

tokenizer = AutoTokenizer.from_pretrained("roberta-base")

|

||||||

|

model = AutoModel.from_pretrained("roberta-base")

|

||||||

|

|

||||||

|

for chunk in table:

|

||||||

|

tokens = tokenizer(chunk, add_special_tokens = True, truncation = True, padding = "max_length", max_length=92, return_attention_mask = True, return_tensors = "pt")

|

||||||

|

outputs = model(**tokens)

|

||||||

|

embeddings = outputs.last_hidden_state.detach().numpy()

|

||||||

|

...

|

||||||

|

```

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Data Collection

|

||||||

|

Classification Embeddings (medium)

|

||||||

|

|

||||||

|

```python

|

||||||

|

...

|

||||||

|

outputs = model(**tokens)[0].detach().numpy()

|

||||||

|

scores = 1 / (1 + np.exp(-outputs)) # Sigmoid

|

||||||

|

class_ids = np.argmax(scores, axis=1)

|

||||||

|

for i, class_id in enumerate(class_ids):

|

||||||

|

results.append({"story_id": ids[i], "label" : model.config.id2label[class_id]})

|

||||||

|

...

|

||||||

|

```

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# Data Selection

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Data Selection

|

||||||

|

Stories

|

||||||

|

|

||||||

|

- Clip the first and last full year of stories. <!-- .element: class="fragment" -->

|

||||||

|

- Remove duplicate stories (big stories span multiple days). <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

==

|

||||||

|

# Data Selection

|

||||||

|

|

||||||

|

Publishers

|

||||||

|

|

||||||

|

- Combine subdomains of stories. <!-- .element: class="fragment" -->

|

||||||

|

- blog.washingtonpost.com and washingtonpost.com are considered the same publisher.

|

||||||

|

- This could be bad. For example: opinion.wsj.com != wsj.com.

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Data Selection

|

||||||

|

|

||||||

|

Links

|

||||||

|

|

||||||

|

- Select only stories with publishers whose story had been a 'parent' ('original publishers'). <!-- .element: class="fragment" -->

|

||||||

|

- Eliminates small blogs and non-original news.

|

||||||

|

- Eliminate publishers without links to original publishers. <!-- .element: class="fragment" -->

|

||||||

|

- Eliminate silo'ed publications.

|

||||||

|

- Link matrix is square and low'ish dimensional.

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Data Selection

|

||||||

|

|

||||||

|

Bias

|

||||||

|

|

||||||

|

- Keep all ratings, even ones with low agree/disagree ratio.

|

||||||

|

- Join datasets on publisher name.

|

||||||

|

- Not automatic (look up Named Entity Recognition). <!-- .element: class="fragment" -->

|

||||||

|

- Started with 'jaro winkler similarity' then manually from there.

|

||||||

|

- Use numeric values

|

||||||

|

- [left: -2, left-center: -1, ...]

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# Descriptive Stats

|

||||||

|

|

||||||

|

Raw

|

||||||

|

|

||||||

|

| metric | value |

|

||||||

|

|:------------------|--------:|

|

||||||

|

| total stories | 299714 |

|

||||||

|

| total related | 960111 |

|

||||||

|

| publishers | 7031 |

|

||||||

|

| authors | 34346 |

|

||||||

|

| max year | 2023 |

|

||||||

|

| min year | 2005 |

|

||||||

|

| top level domains | 7063 |

|

||||||

|

|

||||||

|

==

|

||||||

|

# Descriptive Stats

|

||||||

|

|

||||||

|

Stories Per Publisher

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Descriptive Stats

|

||||||

|

|

||||||

|

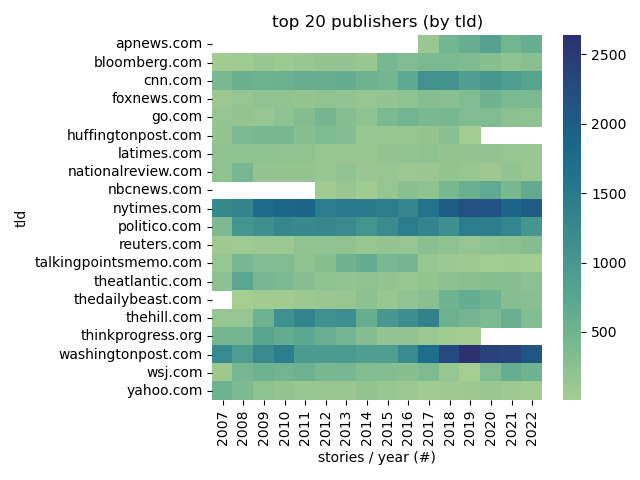

Top Publishers

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

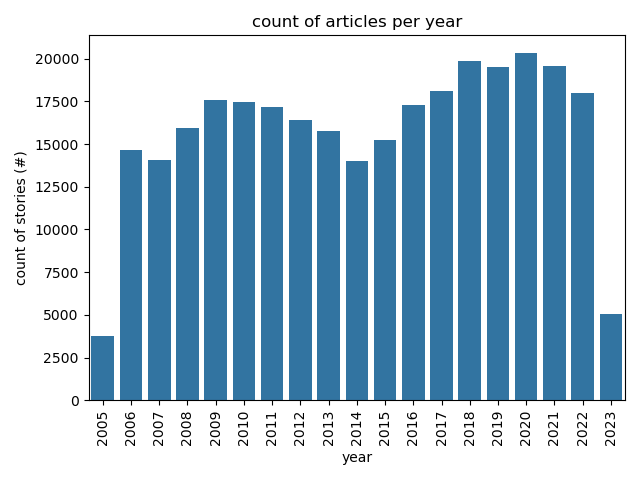

# Descriptive Stats

|

||||||

|

|

||||||

|

Articles Per Year

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

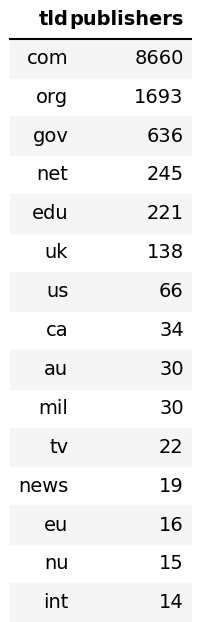

# Descriptive Stats

|

||||||

|

|

||||||

|

Common TLDs

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Descriptive Stats

|

||||||

|

|

||||||

|

Post Process

|

||||||

|

|

||||||

|

| key | value |

|

||||||

|

|:------------------|--------:|

|

||||||

|

| total stories | 251553 |

|

||||||

|

| total related | 815183 |

|

||||||

|

| publishers | 223 |

|

||||||

|

| authors | 23809 |

|

||||||

|

| max year | 2022 |

|

||||||

|

| min year | 2006 |

|

||||||

|

| top level domains | 234 |

|

||||||

|

|

||||||

|

===

|

||||||

|

# Experiments

|

||||||

|

|

||||||

|

1. **clustering** on link similarity. <!-- .element: class="fragment" -->

|

||||||

|

2. **classification** on link similarity. <!-- .element: class="fragment" -->

|

||||||

|

3. **classification** on sentence embedding. <!-- .element: class="fragment" -->

|

||||||

|

4. **classification** on sentiment analysis. <!-- .element: class="fragment" -->

|

||||||

|

5. **regression** on emotional classification over time and publication. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

===

|

||||||

|

# Experiment 1

|

||||||

|

|

||||||

|

Setup

|

||||||

|

|

||||||

|

- Create one-hot encoding of links between publishers. <!-- .element: class="fragment" -->

|

||||||

|

- Cluster the encoding. <!-- .element: class="fragment" -->

|

||||||

|

- Expect similar publications in same cluster. <!-- .element: class="fragment" -->

|

||||||

|

- Use PCA to visualize clusters. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

Note:

|

||||||

|

Principle Component Analysis:

|

||||||

|

- a statistical technique for reducing the dimensionality of a dataset.

|

||||||

|

- linear transformation into a new coordinate system where (most of) the variation data can be described with fewer dimensions than the initial data.

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 1

|

||||||

|

|

||||||

|

One Hot Encoding

|

||||||

|

|

||||||

|

| publisher | nytimes| wsj| newsweek| ...|

|

||||||

|

|:----------|--------:|----:|--------:|----:|

|

||||||

|

| nytimes | 1| 1| 1| ...|

|

||||||

|

| wsj | 1| 1| 0| ...|

|

||||||

|

| newsweek | 0| 0| 1| ...|

|

||||||

|

| ... | ...| ...| ...| ...|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 1

|

||||||

|

|

||||||

|

n-Hot Encoding

|

||||||

|

|

||||||

|

| publisher | nytimes| wsj| newsweek| ...|

|

||||||

|

|:----------|--------:|----:|--------:|----:|

|

||||||

|

| nytimes | 11| 1| 141| ...|

|

||||||

|

| wsj | 1| 31| 0| ...|

|

||||||

|

| newsweek | 0| 0| 1| ...|

|

||||||

|

| ... | ...| ...| ...| ...|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 1

|

||||||

|

|

||||||

|

Normalized n-Hot Encoding

|

||||||

|

|

||||||

|

| publisher | nytimes| wsj| newsweek| ...|

|

||||||

|

|:----------|--------:|----:|--------:|----:|

|

||||||

|

| nytimes | 0| 0.4| 0.2| ...|

|

||||||

|

| wsj | 0.2| 0| 0.4| ...|

|

||||||

|

| newsweek | 0.0| 0.0| 0.0| ...|

|

||||||

|

| ... | ...| ...| ...| ...|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 1

|

||||||

|

|

||||||

|

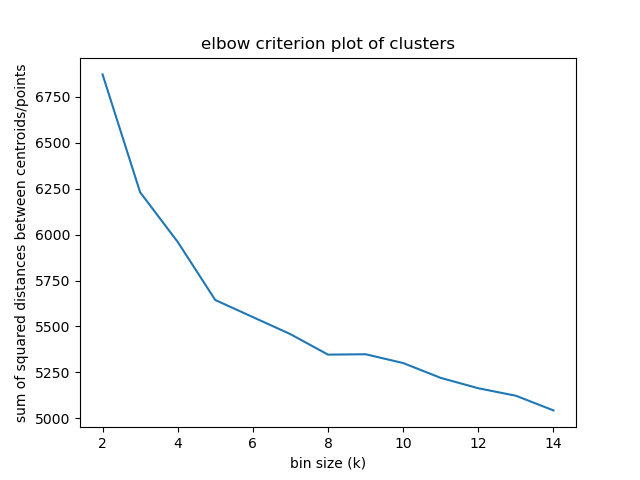

Elbow criterion

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Note:

|

||||||

|

|

||||||

|

The elbow method looks at the percentage of explained variance as a function of the number of clusters:

|

||||||

|

|

||||||

|

One should choose a number of clusters so that adding another cluster doesn't give much better modeling of the data.

|

||||||

|

|

||||||

|

Percentage of variance explained is the ratio of the between-group variance to the total variance,

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 1

|

||||||

|

|

||||||

|

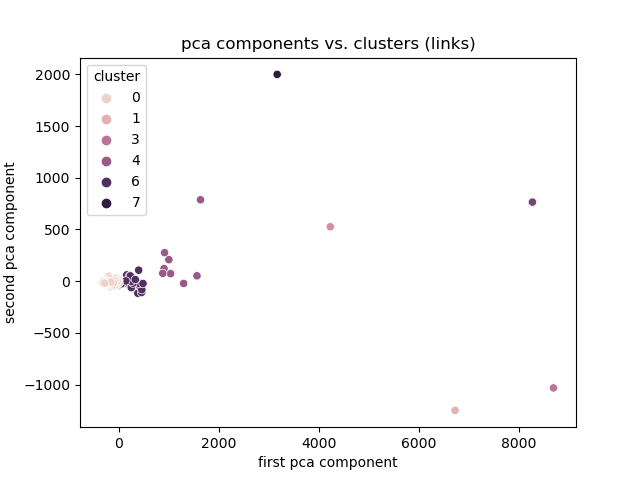

Link Magnitude

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 1

|

||||||

|

|

||||||

|

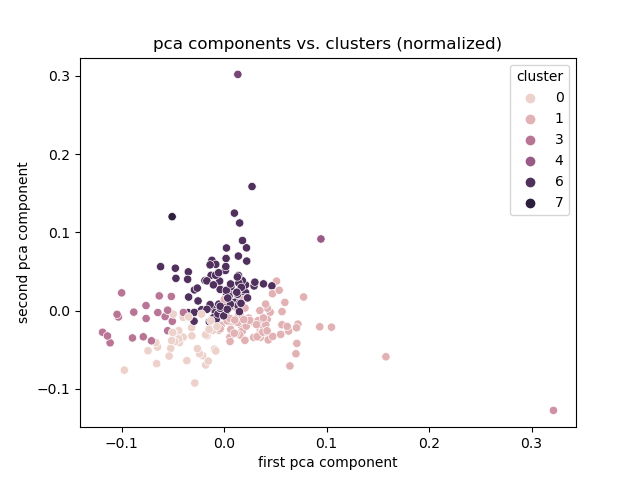

Normalized

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 1

|

||||||

|

|

||||||

|

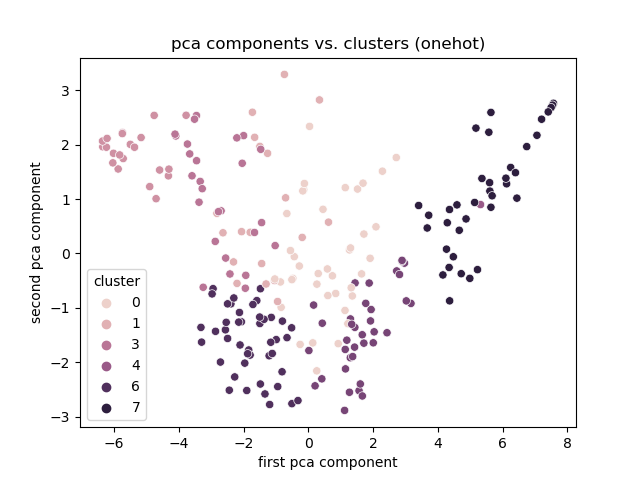

Onehot

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 1

|

||||||

|

|

||||||

|

Discussion

|

||||||

|

|

||||||

|

- Best encoding: One hot. <!-- .element: class="fragment" -->

|

||||||

|

- Clusters based on total links otherwise.

|

||||||

|

- Clusters, but no explanation

|

||||||

|

- Limitation: need the link encoding to cluster.

|

||||||

|

- Smaller publishers might not link very much.

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# Experiment 2

|

||||||

|

|

||||||

|

Setup

|

||||||

|

|

||||||

|

- Create features. <!-- .element: class="fragment" -->:

|

||||||

|

- Publisher frequency.

|

||||||

|

- Reuse link encodings.

|

||||||

|

- Create classes: <!-- .element: class="fragment" -->

|

||||||

|

- Join bias classifications.

|

||||||

|

- Train classifier. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

Note:

|

||||||

|

|

||||||

|

==

|

||||||

|

# Experiment 2

|

||||||

|

Descriptive stats

|

||||||

|

|

||||||

|

| metric | value |

|

||||||

|

|:------------|:----------|

|

||||||

|

| publishers | 1582 |

|

||||||

|

| labels | 6 |

|

||||||

|

| left | 482 |

|

||||||

|

| center | 711 |

|

||||||

|

| right | 369 |

|

||||||

|

| agree range | [0.0-1.0] |

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

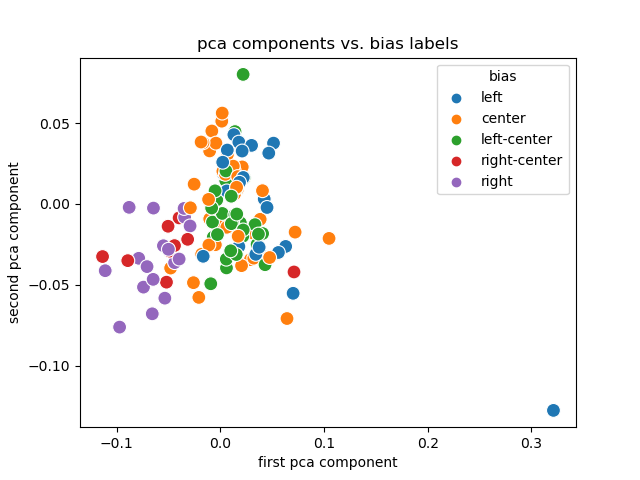

# Experiment 2

|

||||||

|

|

||||||

|

PCA + Labels

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 2

|

||||||

|

|

||||||

|

Discussion

|

||||||

|

|

||||||

|

- Link encodings (and their PCA) are useful. <!-- .element: class="fragment" -->

|

||||||

|

- Labels are (sort of) separated and clustered.

|

||||||

|

- Creating them for smaller publishers is trivial.

|

||||||

|

==

|

||||||

|

|

||||||

|

# Experiment 2

|

||||||

|

|

||||||

|

Limitations

|

||||||

|

|

||||||

|

- Dependent on accurate rating. <!-- .element: class="fragment" -->

|

||||||

|

- Ordinal ratings not available. <!-- .element: class="fragment" -->

|

||||||

|

- Dependent on accurate joining across datasets. <!-- .element: class="fragment" -->

|

||||||

|

- Entire publication is rated, not authors. <!-- .element: class="fragment" -->

|

||||||

|

- Don't know what to do with community rating. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# Experiment 3

|

||||||

|

|

||||||

|

Setup

|

||||||

|

|

||||||

|

==

|

||||||

|

|

||||||

|

# Limitations

|

||||||

|

|

||||||

|

- Many different authors under the same publisher. <!-- .element: class="fragment" -->

|

||||||

|

- Publishers use syndication. <!-- .element: class="fragment" -->

|

||||||

|

- Bias ratings are biased. <!-- .element: class="fragment" -->

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

# Questions

|

||||||

|

|

||||||

|

===

|

||||||

|

|

||||||

|

<!-- .section: id="references" -->

|

||||||

|

|

||||||

|

# References

|

||||||

|

|

||||||

|

[1]: Stewart, A.J. et al. 2020. Polarization under rising inequality and economic decline. Science Advances. 6, 50 (Dec. 2020), eabd4201. DOI:https://doi.org/10.1126/sciadv.abd4201.

|

||||||

|

|

||||||

|

Note:

|

||||||

218

src/bias.py

|

|

@ -1,12 +1,12 @@

|

||||||

import click

|

import click

|

||||||

from data import connect

|

from data.main import connect

|

||||||

import pandas as pd

|

import pandas as pd

|

||||||

from lxml import etree

|

from lxml import etree

|

||||||

from pathlib import Path

|

from pathlib import Path

|

||||||

import os

|

import os

|

||||||

import csv

|

import csv

|

||||||

|

|

||||||

def map(rating:str) -> int:

|

def label_to_int(rating:str) -> int:

|

||||||

|

|

||||||

mapping = {

|

mapping = {

|

||||||

'left' : 0,

|

'left' : 0,

|

||||||

|

|

@ -19,20 +19,18 @@ def map(rating:str) -> int:

|

||||||

|

|

||||||

return mapping[rating]

|

return mapping[rating]

|

||||||

|

|

||||||

|

def int_to_label(class_id: int) -> str:

|

||||||

|

mapping = {

|

||||||

|

0 : 'left',

|

||||||

|

1 : 'left-center',

|

||||||

|

2 : 'center',

|

||||||

|

3 : 'right-center',

|

||||||

|

4 : 'right',

|

||||||

|

-1 : 'allsides',

|

||||||

|

}

|

||||||

|

return mapping[class_id]

|

||||||

|

|

||||||

@click.command(name="bias:load")

|

|

||||||

def load() -> None:

|

|

||||||

DB = connect()

|

|

||||||

DATA_DIR = Path(os.environ['DATA_MINING_DATA_DIR'])

|

|

||||||

f = str(DATA_DIR / "bias_ratings.csv")

|

|

||||||

|

|

||||||

DB.sql(f"""

|

|

||||||

create table bias_ratings as

|

|

||||||

select

|

|

||||||

row_number() over(order by b.publisher) as id

|

|

||||||

,b.*

|

|

||||||

from read_csv_auto('{f}') b

|

|

||||||

""")

|

|

||||||

@click.command(name="bias:normalize")

|

@click.command(name="bias:normalize")

|

||||||

def normalize() -> None:

|

def normalize() -> None:

|

||||||

DB = connect()

|

DB = connect()

|

||||||

|

|

@ -41,133 +39,48 @@ def normalize() -> None:

|

||||||

CREATE OR REPLACE TABLE publisher_bias AS

|

CREATE OR REPLACE TABLE publisher_bias AS

|

||||||

WITH cte AS (

|

WITH cte AS (

|

||||||

SELECT

|

SELECT

|

||||||

p.id

|

p.id as publisher_id

|

||||||

|

,b.id as bias_id

|

||||||

,b.bias as label

|

,b.bias as label

|

||||||

,JARO_WINKLER_SIMILARITY(LOWER(p.name), LOWER(b.publisher)) as similarity

|

,JARO_WINKLER_SIMILARITY(LOWER(p.name), LOWER(b.publisher)) as similarity

|

||||||

FROM bias_ratings b

|

FROM bias_ratings b

|

||||||

JOIN publishers p

|

JOIN top.publishers p

|

||||||

ON JARO_WINKLER_SIMILARITY(LOWER(p.name), LOWER(b.publisher)) > 0.95

|

ON JARO_WINKLER_SIMILARITY(LOWER(p.name), LOWER(b.publisher)) > 0.95

|

||||||

),ranked AS (

|

),ranked AS (

|

||||||

SELECT

|

SELECT

|

||||||

id

|

publisher_id

|

||||||

|

,bias_id

|

||||||

,label

|

,label

|

||||||

,similarity

|

,similarity

|

||||||

,ROW_NUMBER() OVER(PARTITION BY id ORDER BY similarity DESC) AS rn

|

,ROW_NUMBER() OVER(PARTITION BY publisher_id ORDER BY similarity DESC) AS rn

|

||||||

FROM cte

|

FROM cte

|

||||||

)

|

)

|

||||||

SELECT

|

SELECT

|

||||||

id

|

publisher_id

|

||||||

,label

|

,label

|

||||||

|

,bias_id

|

||||||

FROM ranked

|

FROM ranked

|

||||||

WHERE ranked.rn = 1

|

WHERE ranked.rn = 1

|

||||||

""")

|

""")

|

||||||

|

|

||||||

|

mapping = [

|

||||||

|

{'label' :'left' , 'ordinal': -2},

|

||||||

|

{'label' :'left-center' , 'ordinal': -1},

|

||||||

|

{'label' :'center' , 'ordinal': 0},

|

||||||

|

{'label' :'right-center' , 'ordinal': 1},

|

||||||

|

{'label' :'right' , 'ordinal': 2},

|

||||||

|

]

|

||||||

|

mapping = pd.DataFrame(mapping)

|

||||||

|

|

||||||

DB.sql("""

|

DB.query("alter table bias_ratings add column ordinal int")

|

||||||

with cte as (

|

|

||||||

select

|

DB.query("""

|

||||||

s.publisher_id

|

update bias_ratings b

|

||||||

,count(1) as stories

|

set ordinal = o.ordinal

|

||||||

from stories s

|

FROM mapping o

|

||||||

group by s.publisher_id

|

WHERE o.label = b.bias

|

||||||

)

|

|

||||||

select

|

|

||||||

s.publisher

|

|

||||||

,s.stories

|

|

||||||

,b.publisher

|

|

||||||

,b.bias

|

|

||||||

from bias_ratings b

|

|

||||||

join cte s

|

|

||||||

on s.publisher = b.publisher

|

|

||||||

order by

|

|

||||||

stories desc

|

|

||||||

limit 15

|

|

||||||

""")

|

""")

|

||||||

|

|

||||||

DB.sql("""

|

|

||||||

with cte as (

|

|

||||||

select

|

|

||||||

s.publisher

|

|

||||||

,count(1) as stories

|

|

||||||

from stories s

|

|

||||||

group by s.publisher

|

|

||||||

)

|

|

||||||

select

|

|

||||||

sum(stories)

|

|

||||||

,avg(agree / disagree)

|

|

||||||

from bias_ratings b

|

|

||||||

join cte s

|

|

||||||

on s.publisher = b.publisher

|

|

||||||

""")

|

|

||||||

|

|

||||||

DB.sql("""

|

|

||||||

with cte as (

|

|

||||||

select

|

|

||||||

s.publisher

|

|

||||||

,count(1) as stories

|

|

||||||

from stories s

|

|

||||||

group by s.publisher

|

|

||||||

)

|

|

||||||

select

|

|

||||||

sum(s.stories) filter(where b.publisher is not null) as matched

|

|

||||||

,sum(s.stories) filter(where b.publisher is null) as unmatched

|

|

||||||

,cast(sum(s.stories) filter(where b.publisher is not null) as numeric)

|

|

||||||

/ sum(s.stories) filter(where b.publisher is null) as precent_matched

|

|

||||||

from bias_ratings b

|

|

||||||

right join cte s

|

|

||||||

on s.publisher = b.publisher

|

|

||||||

""")

|

|

||||||

|

|

||||||

DB.sql("""

|

|

||||||

select

|

|

||||||

*

|

|

||||||

from bias_ratings

|

|

||||||

where publisher ilike '%CNN%'

|

|

||||||

""")

|

|

||||||

|

|

||||||

@click.command(name='bias:debug')

|

|

||||||

def debug() -> None:

|

|

||||||

DB = connect()

|

|

||||||

DATA_DIR = Path(os.environ['DATA_MINING_DATA_DIR'])

|

|

||||||

f = str(DATA_DIR / "bias_ratings.csv")

|

|

||||||

|

|

||||||

DB.sql("""

|

|

||||||

with cte as (

|

|

||||||

select

|

|

||||||

outlet

|

|

||||||

,count(1) as stories

|

|

||||||

from stories

|

|

||||||

group by outlet

|

|

||||||

)

|

|

||||||

,total as (

|

|

||||||

select

|

|

||||||

sum(stories) as total

|

|

||||||

from cte

|

|

||||||

)

|

|

||||||

select

|

|

||||||

cte.outlet

|

|

||||||

,cte.stories

|

|

||||||

,bias.outlet

|

|

||||||

,bias.lean

|

|

||||||

,sum(100 * (cte.stories / cast(total.total as float))) over() as rep

|

|

||||||

,total.total

|

|

||||||

from cte

|

|

||||||

join bias

|

|

||||||

on jaro_winkler_similarity(bias.outlet, cte.outlet) > 0.9

|

|

||||||

cross join total.total

|

|

||||||

""")

|

|

||||||

|

|

||||||

DB.sql("""

|

|

||||||

select

|

|

||||||

outlet

|

|

||||||

,count(1) as stories

|

|

||||||

from stories

|

|

||||||

group by outlet

|

|

||||||

order by count(1) desc

|

|

||||||

limit 50

|

|

||||||

""")

|

|

||||||

|

|

||||||

outlets

|

|

||||||

|

|

||||||

@click.command(name='bias:parse')

|

@click.command(name='bias:parse')

|

||||||

def parse() -> None:

|

def parse() -> None:

|

||||||

|

|

@ -199,3 +112,64 @@ def parse() -> None:

|

||||||

ratings.append(rating)

|

ratings.append(rating)

|

||||||

df = pd.DataFrame(ratings)

|

df = pd.DataFrame(ratings)

|

||||||

df.to_csv(DATA_DIR / 'bias_ratings.csv', sep="|", index=False, quoting=csv.QUOTE_NONNUMERIC)

|

df.to_csv(DATA_DIR / 'bias_ratings.csv', sep="|", index=False, quoting=csv.QUOTE_NONNUMERIC)

|

||||||

|

|

||||||

|

@click.command(name="bias:load")

|

||||||

|

def load() -> None:

|

||||||

|

DB = connect()

|

||||||

|

DATA_DIR = Path(os.environ['DATA_MINING_DATA_DIR'])

|

||||||

|

f = str(DATA_DIR / "bias_ratings.csv")

|

||||||

|

|

||||||

|

DB.sql(f"""

|

||||||

|

CREATE TABLE bias_ratings as

|

||||||

|

select

|

||||||

|

row_number() over(order by b.publisher) as id

|

||||||

|

,b.*

|

||||||

|

from read_csv_auto('{f}') b

|

||||||

|

""")

|

||||||

|

|

||||||

|

@click.command('bias:export')

|

||||||

|

def export():

|

||||||

|

data_path = Path(os.environ['DATA_MINING_DATA_DIR'])

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

|

all_bias = DB.query("""

|

||||||

|

SELECT

|

||||||

|

id as bias_id

|

||||||

|

,publisher as name

|

||||||

|

,bias as label

|

||||||

|

FROM bias_ratings

|

||||||

|

ORDER by agree desc

|

||||||

|

""")

|

||||||

|

all_bias.df().to_csv(data_path / 'TMP_publisher_bias.csv', sep="|", index=False)

|

||||||

|

mapped_bias = DB.query("""

|

||||||

|

SELECT

|

||||||

|

p.id as publisher_id

|

||||||

|

,p.name as name

|

||||||

|

,p.tld as tld

|

||||||

|

,b.label as bias

|

||||||

|

,b.bias_id as bias_id

|

||||||

|

FROM top.publishers p

|

||||||

|

LEFT JOIN publisher_bias b

|

||||||

|

ON b.publisher_id = p.id

|

||||||

|

""")

|

||||||

|

mapped_bias.df().to_csv(data_path / 'TMP_publisher_bias_to_load.csv', sep="|", index=False)

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

@click.command('bias:import-mapped')

|

||||||

|

def import_mapped():

|

||||||

|

data_path = Path(os.environ['DATA_MINING_DATA_DIR'])

|

||||||

|

table_name = "top.publisher_bias"

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

|

df = pd.read_csv(data_path / 'TMP_publisher_bias_to_load.csv', sep="|")

|

||||||

|

|

||||||

|

DB.query(f"""

|

||||||

|

CREATE OR REPLACE TABLE {table_name} AS

|

||||||

|

SELECT

|

||||||

|

publisher_id AS publisher_id

|

||||||

|

,cast(bias_id AS int) as bias_id

|

||||||

|

FROM df

|

||||||

|

WHERE bias_id IS NOT NULL

|

||||||

|

""")

|

||||||

|

print(f"created table: {table_name}")

|

||||||

|

|

||||||

|

|

|

||||||

24

src/cli.py

|

|

@ -7,7 +7,7 @@ def cli():

|

||||||

|

|

||||||

if __name__ == "__main__":

|

if __name__ == "__main__":

|

||||||

load_dotenv()

|

load_dotenv()

|

||||||

import scrape

|

from data import scrape

|

||||||

cli.add_command(scrape.download)

|

cli.add_command(scrape.download)

|

||||||

cli.add_command(scrape.parse)

|

cli.add_command(scrape.parse)

|

||||||

cli.add_command(scrape.load)

|

cli.add_command(scrape.load)

|

||||||

|

|

@ -32,4 +32,26 @@ if __name__ == "__main__":

|

||||||

cli.add_command(emotion.create_table)

|

cli.add_command(emotion.create_table)

|

||||||

import sentence

|

import sentence

|

||||||

cli.add_command(sentence.embed)

|

cli.add_command(sentence.embed)

|

||||||

|

from train import main as train_main

|

||||||

|

cli.add_command(train_main.main)

|

||||||

|

|

||||||

|

import plots.descriptive as plotd

|

||||||

|

cli.add_command(plotd.articles_per_year)

|

||||||

|

cli.add_command(plotd.distinct_publishers)

|

||||||

|

cli.add_command(plotd.stories_per_publisher)

|

||||||

|

cli.add_command(plotd.top_publishers)

|

||||||

|

cli.add_command(plotd.common_tld)

|

||||||

|

|

||||||

|

import links as linkcli

|

||||||

|

cli.add_command(linkcli.create_table)

|

||||||

|

cli.add_command(linkcli.create_pca)

|

||||||

|

cli.add_command(linkcli.create_clusters)

|

||||||

|

|

||||||

|

import plots.links as plotl

|

||||||

|

cli.add_command(plotl.elbow)

|

||||||

|

cli.add_command(plotl.link_pca_clusters)

|

||||||

|

|

||||||

|

import plots.classifier as plotc

|

||||||

|

cli.add_command(plotc.pca_with_classes)

|

||||||

|

|

||||||

cli()

|

cli()

|

||||||

|

|

|

||||||

|

|

@ -0,0 +1,6 @@

|

||||||

|

import data.main

|

||||||

|

import data.scrape

|

||||||

|

__all__ = [

|

||||||

|

'main'

|

||||||

|

,'scrape'

|

||||||

|

]

|

||||||

|

|

@ -4,10 +4,12 @@ import requests

|

||||||

from pathlib import Path

|

from pathlib import Path

|

||||||

import click

|

import click

|

||||||

from tqdm import tqdm

|

from tqdm import tqdm

|

||||||

from data import data_dir, connect

|

from data.main import data_dir, connect

|

||||||

from lxml import etree

|

from lxml import etree

|

||||||

import pandas as pd

|

import pandas as pd

|

||||||

from urllib.parse import urlparse

|

from urllib.parse import urlparse

|

||||||

|

from tld import get_tld

|

||||||

|

from tld.utils import update_tld_names

|

||||||

|

|

||||||

@click.command(name='scrape:load')

|

@click.command(name='scrape:load')

|

||||||

@click.option('--directory', type=Path, default=data_dir(), show_default=True)

|

@click.option('--directory', type=Path, default=data_dir(), show_default=True)

|

||||||

|

|

@ -61,6 +63,7 @@ def download(output_dir):

|

||||||

@click.option('-o', '--output_dir', type=Path, default=data_dir(), show_default=True)

|

@click.option('-o', '--output_dir', type=Path, default=data_dir(), show_default=True)

|

||||||

def parse(directory, output_dir):

|

def parse(directory, output_dir):

|

||||||

"""parse the html files on disk into a structured csv format."""

|

"""parse the html files on disk into a structured csv format."""

|

||||||

|

update_tld_names()

|

||||||

directory = data_dir() / "memeorandum"

|

directory = data_dir() / "memeorandum"

|

||||||

parser = etree.HTMLParser()

|

parser = etree.HTMLParser()

|

||||||

pages = [f for f in directory.glob("*.html")]

|

pages = [f for f in directory.glob("*.html")]

|

||||||

|

|

@ -104,8 +107,7 @@ def parse(directory, output_dir):

|

||||||

|

|

||||||

url = item.xpath('.//strong/a')[0].get('href')

|

url = item.xpath('.//strong/a')[0].get('href')

|

||||||

out['url'] = url

|

out['url'] = url

|

||||||

out['publisher_url_domain'] = urlparse(publisher_url).netloc

|

out['tld'] = get_tld(publisher_url)

|

||||||

out['domain'] = urlparse(url).netloc

|

|

||||||

|

|

||||||

item_id = hash((page.stem, url))

|

item_id = hash((page.stem, url))

|

||||||

out['id'] = item_id

|

out['id'] = item_id

|

||||||

|

|

@ -225,3 +227,111 @@ def normalize():

|

||||||

alter table related_stories drop publisher_domain;

|

alter table related_stories drop publisher_domain;

|

||||||

""")

|

""")

|

||||||

|

|

||||||

|

|

||||||

|

def another_norm():

|

||||||

|

sv2 = pd.read_csv(data_dir / 'stories.csv', sep="|")

|

||||||

|

related = pd.read_csv(data_dir / 'related.csv', sep="|")

|

||||||

|

|

||||||

|

related['tld'] = related.url.apply(lambda x: map_tld(x))

|

||||||

|

|

||||||

|

DB.query("""

|

||||||

|

update related_stories

|

||||||

|

set publisher_id = p.id

|

||||||

|

from publishers p

|

||||||

|

join related r

|

||||||

|

on r.tld = p.tld

|

||||||

|

where r.url = related_stories.url

|

||||||

|

""")

|

||||||

|

|

||||||

|

|

||||||

|

DB.query("""alter table stories add column tld text""")

|

||||||

|

|

||||||

|

s_url = DB.query("""

|

||||||

|

select

|

||||||

|

id

|

||||||

|

,url

|

||||||

|

from stories

|

||||||

|

""").df()

|

||||||

|

|

||||||

|

|

||||||

|

s_url['tld'] = s_url.url.apply(lambda x: map_tld(x))

|

||||||

|

|

||||||

|

DB.query("""

|

||||||

|

update stories

|

||||||

|

set tld = s_url.tld

|

||||||

|

from s_url

|

||||||

|

where s_url.id = stories.id

|

||||||

|

""")

|

||||||

|

|

||||||

|

DB.query("""

|

||||||

|

update stories

|

||||||

|

set publisher_id = p.id

|

||||||

|

from publishers p

|

||||||

|

where p.tld = stories.tld

|

||||||

|

""")

|

||||||

|

|

||||||

|

|

||||||

|

select

|

||||||

|

DB.query("""

|

||||||

|

update stories

|

||||||

|

set stories.publisher_id = p.id

|

||||||

|

from new_pub

|

||||||

|

""")

|

||||||

|

sv2['tld'] = sv2.publisher_url.apply(lambda x: map_tld(x))

|

||||||

|

|

||||||

|

|

||||||

|

new_pub = DB.query("""

|

||||||

|

with cte as (

|

||||||

|

select

|

||||||

|

tld

|

||||||

|

,publisher

|

||||||

|

,count(1) filter(where year(published_at) = 2022) as recent_ctn

|

||||||

|

,count(1) as ctn

|

||||||

|

from sv2

|

||||||

|

group by

|

||||||

|

tld

|

||||||

|

,publisher

|

||||||

|

)

|

||||||

|

,r as (

|

||||||

|

select

|

||||||

|

tld

|

||||||

|

,publisher

|

||||||

|

,ctn

|

||||||

|

,row_number() over(partition by tld order by recent_ctn desc) as rn

|

||||||

|

from cte

|

||||||

|

)

|

||||||

|

select

|

||||||

|

row_number() over() as id

|

||||||

|

,publisher as name

|

||||||

|

,tld

|

||||||

|

from r

|

||||||

|

where rn = 1

|

||||||

|

order by ctn desc

|

||||||

|

""").df()

|

||||||

|

|

||||||

|

DB.query("""

|

||||||

|

CREATE OR REPLACE TABLE publishers AS

|

||||||

|

SELECT

|

||||||

|

id

|

||||||

|

,name

|

||||||

|

,tld

|

||||||

|

FROM new_pub

|

||||||

|

""")

|

||||||

|

|

||||||

|

|

||||||

|

def map_tld(x):

|

||||||

|

try:

|

||||||

|

res = get_tld(x, as_object=True)

|

||||||

|

return res.fld

|

||||||

|

except:

|

||||||

|

return None

|

||||||

|

|

||||||

|

DB.sql("""

|

||||||

|

SELECT

|

||||||

|

s.id

|

||||||

|

,sv2.publisher_url

|

||||||

|

FROM stories s

|

||||||

|

JOIN sv2

|

||||||

|

on sv2.id = s.id

|

||||||

|

limit 5

|

||||||

|

""")

|

||||||

|

|

@ -6,7 +6,7 @@ import numpy as np

|

||||||

|

|

||||||

from transformers import BertTokenizer

|

from transformers import BertTokenizer

|

||||||

from model import BertForMultiLabelClassification

|

from model import BertForMultiLabelClassification

|

||||||

from data import connect, data_dir

|

from data.main import connect, data_dir

|

||||||

import seaborn as sns

|

import seaborn as sns

|

||||||

import matplotlib.pyplot as plt

|

import matplotlib.pyplot as plt

|

||||||

from matplotlib.dates import DateFormatter

|

from matplotlib.dates import DateFormatter

|

||||||

|

|

@ -376,3 +376,99 @@ def debug():

|

||||||

DB.close()

|

DB.close()

|

||||||

|

|

||||||

out.to_csv(data_dir() / 'emotions.csv', sep="|")

|

out.to_csv(data_dir() / 'emotions.csv', sep="|")

|

||||||

|

|

||||||

|

def another():

|

||||||

|

DB = connect()

|

||||||

|

DB.sql("""

|

||||||

|

select

|

||||||

|

*

|

||||||

|

from emotions

|

||||||

|

""")

|

||||||

|

|

||||||

|

emotions = DB.sql("""

|

||||||

|

select

|

||||||

|

year(s.published_at) as year

|

||||||

|

,se.label as emotion

|

||||||

|

,count(1) as stories

|

||||||

|

from stories s

|

||||||

|

join story_emotions se

|

||||||

|

on s.id = se.story_id

|

||||||

|

group by

|

||||||

|

year(s.published_at)

|

||||||

|

,se.label

|

||||||

|

""").df()

|

||||||

|

|

||||||

|

sns.scatterplot(x=emotions['year'], y=emotions['stories'], hue=emotions['emotion'])

|

||||||

|

plt.show()

|

||||||

|

|

||||||

|

pivot = emotions.pivot(index='year', columns='emotion', values='stories')

|

||||||

|

pivot.reset_index(inplace=True)

|

||||||

|

from sklearn.linear_model import LinearRegression

|

||||||

|

reg = LinearRegression()

|

||||||

|

|

||||||

|

for emotion in pivot.keys()[1:].tolist():

|

||||||

|

_ = reg.fit(pivot['year'].to_numpy().reshape(-1, 1), pivot[emotion])

|

||||||

|

print(f"{emotion}: {reg.coef_[0]}")

|

||||||

|

|

||||||

|

fig, ax = plt.subplots()

|

||||||

|

#sns.lineplot(x=pivot['anger'], y=pivot['joy'])

|

||||||

|

#sns.lineplot(x=pivot['anger'], y=pivot['surprise'], ax=ax)

|

||||||

|

sns.lineplot(x=pivot['anger'], y=pivot['fear'], ax=ax)

|

||||||

|

sns.lineplot(x=pivot[''], y=pivot['fear'], ax=ax)

|

||||||

|

plt.show()

|

||||||

|

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

normalized = DB.sql("""

|

||||||

|

with cte as (

|

||||||

|

select

|

||||||

|

year(s.published_at) as year

|

||||||

|

,se.label as emotion

|

||||||

|

,b.label as bias

|

||||||

|

from stories s

|

||||||

|

join story_emotions se

|

||||||

|

on s.id = se.story_id

|

||||||

|

join publisher_bias b

|

||||||

|

on b.id = s.publisher_id

|

||||||

|

where b.label != 'allsides'

|

||||||

|

and se.label != 'neutral'

|

||||||

|

)

|

||||||

|

select

|

||||||

|

distinct

|

||||||

|

year

|

||||||

|

,emotion

|

||||||

|

,bias

|

||||||

|

,cast(count(1) over(partition by year, bias, emotion) as float) / count(1) over(partition by year, bias) as group_count

|

||||||

|

from cte

|

||||||

|

""").df()

|

||||||

|

|

||||||

|

DB.sql("""

|

||||||

|

select

|

||||||

|

b.label as bias

|

||||||

|

,count(1) as stories

|

||||||

|

from stories s

|

||||||

|

join story_emotions se

|

||||||

|

on s.id = se.story_id

|

||||||

|

join publisher_bias b

|

||||||

|

on b.id = s.publisher_id

|

||||||

|

group by

|

||||||

|

b.label

|

||||||

|

""").df()

|

||||||

|

|

||||||

|

another_pivot = emotional_bias.pivot(index=['bias', 'year'], columns='emotion', values='stories')

|

||||||

|

another_pivot.reset_index(inplace=True)

|

||||||

|

|

||||||

|

sns.lineplot(data=normalized, x='year', y='group_count', hue='bias', style='emotion')

|

||||||

|

plt.show()

|

||||||

|

|

||||||

|

sns.relplot(

|

||||||

|

data=normalized, x="year", y="group_count", hue="emotion", col='bias', kind="line"

|

||||||

|

#data=normalized, x="year", y="group_count", hue="emotion", col='bias', kind="line", facet_kws=dict(sharey=False)

|

||||||

|

)

|

||||||

|

plt.show()

|

||||||

|

|

||||||

|

DB.sql("""

|

||||||

|

select

|

||||||

|

*

|

||||||

|

from another_pivot

|

||||||

|

""")

|

||||||

|

|

|

||||||

|

|

@ -1,8 +0,0 @@

|

||||||

import sklearn

|

|

||||||

import polars as pl

|

|

||||||

import toml

|

|

||||||

from pathlib import Path

|

|

||||||

|

|

||||||

config = toml.load('/home/user/577/repo/config.toml')

|

|

||||||

app_dir = Path(config.get('app').get('path'))

|

|

||||||

df = pl.read_csv(app_dir / "data/articles.csv")

|

|

||||||

158

src/links.py

|

|

@ -1,12 +1,148 @@

|

||||||

from data import connect

|

import click

|

||||||

|

from data.main import connect

|

||||||

import pandas as pd

|

import pandas as pd

|

||||||

import numpy as np

|

import numpy as np

|

||||||

from sklearn.decomposition import PCA, TruncatedSVD

|

|

||||||

from sklearn.cluster import MiniBatchKMeans

|

|

||||||

import seaborn as sns

|

import seaborn as sns

|

||||||

import matplotlib.pyplot as plt

|

import matplotlib.pyplot as plt

|

||||||

|

|

||||||

|

|

||||||

|

@click.command('links:create-table')

|

||||||

|

def create_table():

|

||||||

|

|

||||||

|

table_name = "top.link_edges"

|

||||||

|

DB = connect()

|

||||||

|

DB.query(f"""

|

||||||

|

CREATE OR REPLACE TABLE {table_name} AS

|

||||||

|

with cte as(

|

||||||

|

SELECT

|

||||||

|

s.publisher_id as parent_id

|

||||||

|

,r.publisher_id as child_id

|

||||||

|

,count(1) as links

|

||||||

|

FROM top.stories s

|

||||||

|

JOIN top.related_stories r

|

||||||

|

ON s.id = r.parent_id

|

||||||

|

group by

|

||||||

|

s.publisher_id

|

||||||

|

,r.publisher_id

|

||||||

|

)

|

||||||

|

SELECT

|

||||||

|

cte.parent_id

|

||||||

|

,cte.child_id

|

||||||

|

,cte.links as links

|

||||||

|

,cast(cte.links as float) / sum(cte.links) over(partition by cte.parent_id) as normalized

|

||||||

|

,case when cte.links > 0 then 1 else 0 end as onehot

|

||||||

|

FROM cte

|

||||||

|

WHERE cte.child_id in (

|

||||||

|

SELECT

|

||||||

|

distinct parent_id

|

||||||

|

FROM cte

|

||||||

|

)

|

||||||

|

AND cte.parent_id in (

|

||||||

|

SELECT

|

||||||

|

distinct child_id

|

||||||

|

FROM cte

|

||||||

|

)

|

||||||

|

""")

|

||||||

|

DB.close()

|

||||||

|

|

||||||

|

DB = connect()

|

||||||

|

DB.query("""

|

||||||

|

SELECT

|

||||||

|

*

|

||||||

|

,-log10(links)

|

||||||

|

--distinct parent_id

|

||||||

|

FROM top.link_edges e

|

||||||

|

WHERE e.parent_id = 238

|

||||||

|

""")

|

||||||

|

DB.close()

|

||||||

|

print(f"created {table_name}")

|

||||||

|

|

||||||

|

@click.command('links:create-pca')

|

||||||

|

@click.option('--source', type=click.Choice(['links', 'normalized', 'onehot']), default='links')

|

||||||

|

def create_pca(source):

|

||||||

|

"""create 2D pca labels"""

|

||||||

|

|

||||||

|

from sklearn.decomposition import PCA

|

||||||

|

|

||||||

|

table_name = f"top.publisher_pca_{source}"

|

||||||

|

DB = connect()

|

||||||

|

pub = DB.query("""

|

||||||

|

SELECT

|

||||||

|

*